Cockpit AI Agent: Autonomous scenario creation becomes the first step to personalize cockpits

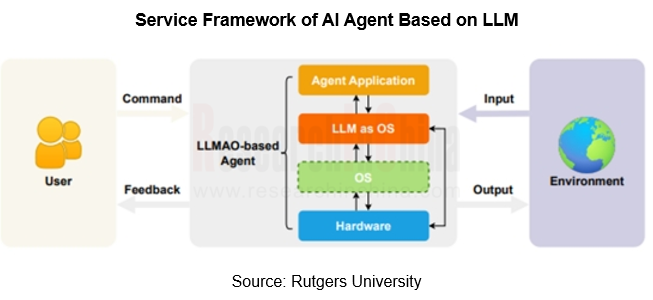

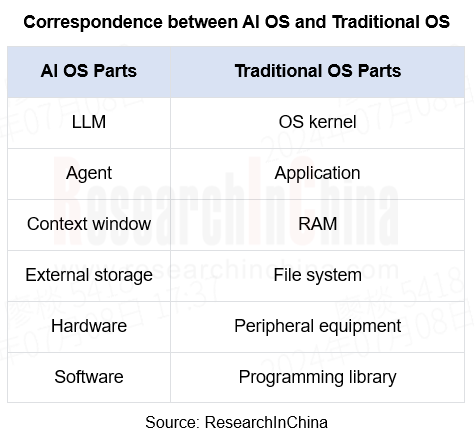

In AI Foundation Models’ Impacts on Vehicle Intelligent Design and Development Research Report, 2024, ResearchInChina noted that an AI Agent uses a large language model (LLM) as its core computing engine (LLM OS). In the AI service framework, the LLM acts as the AI core and the Agent acts as the AI app. With the reasoning and generation capabilities of AI foundation models, the Agent can create more cockpit scenarios while further improving existing cockpit technologies such as multimodal interaction and voice processing.

Compared with a traditional OS, an AI OS is programmed in natural language, which makes it easier for people to operate and more convenient for customization and personalized human-computer interaction. The Agent responds to user prompts or instructions in natural language, and can perform many tasks through its interaction with the physical or digital environment.

Foundation models spawn Agent customized scenarios

Since AI foundation models were installed in cars, they have been widely used in multimodal interaction scenarios. OEMs have started from voice assistants to further improve intelligent processing of user commands:

Before foundation models were installed in cars, the wake-up rate and effective instructions of voice assistants in cockpits were at a low level;

After foundation models became available in cars, voice recognition became more intelligent, expression monitoring and gesture control also improved, and effective commands and daily active rates increased significantly.

AI foundation models further enhance the user experience of smart cockpits through strong context processing and reasoning capabilities. Please refer to the following dialogue:

(Mobility scenario)

-**Tongxue! (the name of a voice assistant)

-I am here!

-There seems to be a prairie ahead. Please take a picture for me!

-Wait a moment, and I will capture the beautiful scenery of the prairie for you. I'll let you know when the photo is taken. Is there anything else I can help you with?

-Send it to Moments immediately. I want my friends to have a look at this beautiful scenery.

-Ok, according to your authorized account, please choose the material and filter style.

-Just this one, with a caption.

-Ok, three captions have been generated for you. Which one do you like?

-Just this one.

(You enter the office and receive a phone call)

-There is a phone call from a customer, and I have transferred it to the IVI for you. Would you like to answer it?

-Yes, and turn on the office mode.

-Ok, I will generate an avatar of the customer for you and start the conversation. Do you have any questions or things to discuss? I will record the conversation and provide you with the minutes of the meeting after it is over.

(The avatar is generated)

-Now you can start the conversation.

The above scenarios did not materialize in the cockpit until 2024, when some OEMs installed foundation models in their vehicles.

For example, IM L6 has built Carlog and City Drive scenarios to enable the AI foundation models to proactively recommend food and attractions and allow users to post them on social media:

Carlog: Actively perceives the scene while driving through an AI vision foundation model, mobilizes four cameras to take photos, automatically saves and edits them, and supports one-click sharing to Moments.

City Drive: Cooperates with Volcengine to model nearby food, scenic spots and landmarks in real time on the digital screen, and pushes them according to users' habits and preferences.

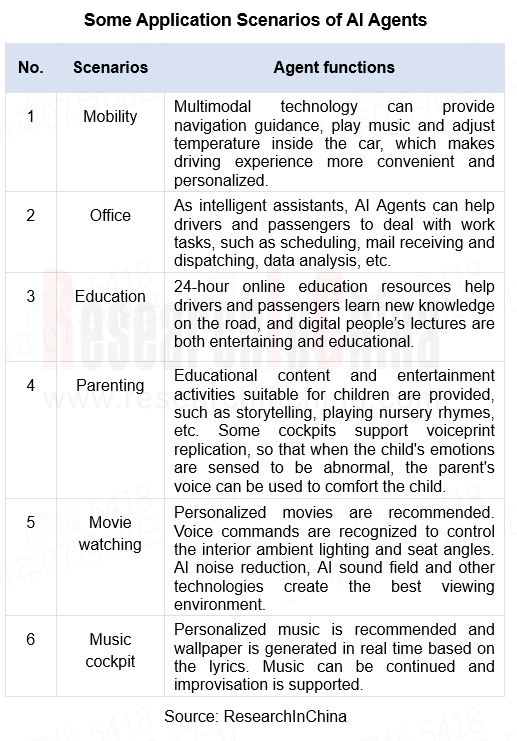

The applicability of foundation models in various scenarios has stimulated users' demand for intelligent agents that can uniformly manage cockpit functions. In 2024, OEMs such as NIO, Li Auto, and Hozon successively launched Agent frameworks, using voice assistants as the starting point to manage functions and applications in cockpits.

Agent service frameworks not only manage cockpit functions in a unified way, but also provide richer scenario modes according to customers' needs and preferences. In particular, they support user-customized scenarios, which accelerates the arrival of the cockpit personalization era.

For example, NIO’s NOMI GPT allows users to set an AI scenario with just one sentence:

Core competence of cockpit Agents

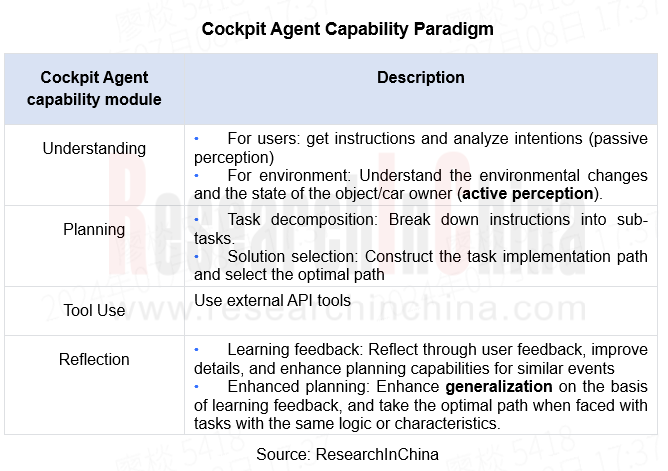

AI Agents in the era of foundation models are built on LLMs, whose powerful reasoning expands the scenarios that Agents can serve. In turn, Agents can improve the reasoning capability of foundation models through feedback obtained during operation. In the cockpit, the Agent capability paradigm can be roughly divided into "Understanding" + "Planning" + "Tool Use" + "Reflection".
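To make the four-part paradigm concrete, the following is a minimal sketch of how the stages might fit together in one loop. It is an illustration only, not any OEM's implementation: the class `CockpitAgent`, the tools `set_temperature` and `play_music`, and the keyword matching that stands in for an LLM are all assumptions introduced here.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical cockpit tools; a real vehicle would expose these through its own service APIs.
def set_temperature(celsius: int) -> str:
    return f"temperature set to {celsius}C"

def play_music(genre: str) -> str:
    return f"playing {genre} music"

TOOLS: dict[str, Callable[..., str]] = {
    "set_temperature": set_temperature,
    "play_music": play_music,
}

@dataclass
class CockpitAgent:
    # Corrections collected during reflection, reused by later planning.
    lessons: dict[str, dict] = field(default_factory=dict)

    def understand(self, utterance: str) -> str:
        """Understanding: map an utterance to an intent (keyword stub in place of an LLM)."""
        text = utterance.lower()
        if "cold" in text or "warm" in text:
            return "adjust_climate"
        if "music" in text or "song" in text:
            return "entertainment"
        return "unknown"

    def plan(self, intent: str) -> list[tuple[str, dict]]:
        """Planning: turn the intent into an ordered list of tool calls."""
        defaults = {
            "adjust_climate": [("set_temperature", {"celsius": 24})],
            "entertainment": [("play_music", {"genre": "pop"})],
        }
        steps = defaults.get(intent, [])
        # Overlay any corrections learned from earlier feedback.
        return [(name, {**args, **self.lessons.get(name, {})}) for name, args in steps]

    def act(self, steps: list[tuple[str, dict]]) -> list[str]:
        """Tool use: execute each planned call."""
        return [TOOLS[name](**args) for name, args in steps]

    def reflect(self, tool: str, correction: dict) -> None:
        """Reflection: store user feedback so the next plan improves."""
        self.lessons.setdefault(tool, {}).update(correction)

    def handle(self, utterance: str) -> list[str]:
        return self.act(self.plan(self.understand(utterance)))
```

In this sketch, `CockpitAgent().handle("I feel cold")` plans a single `set_temperature` call with the default of 24. After `reflect("set_temperature", {"celsius": 26})`, the same utterance produces a plan that uses 26 instead, which is the feedback loop the paragraph describes.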

When Agents are first deployed in cars, cognitive and planning abilities matter most. The understanding of task goals and the choice of implementation paths directly determine the accuracy of the results, which in turn affects how often users rely on Agent scenarios.

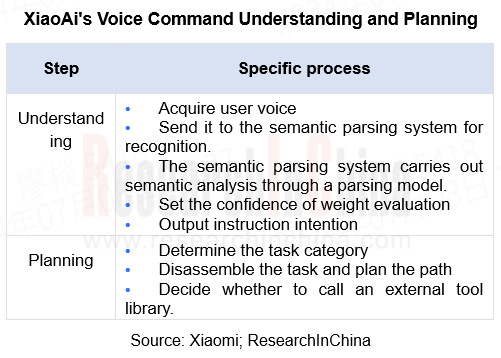

For example, in Xiaomi's voice interaction process, semantic understanding is the hardest part of the entire automotive voice processing pipeline. XiaoAi handles it with a dedicated semantic parsing model.

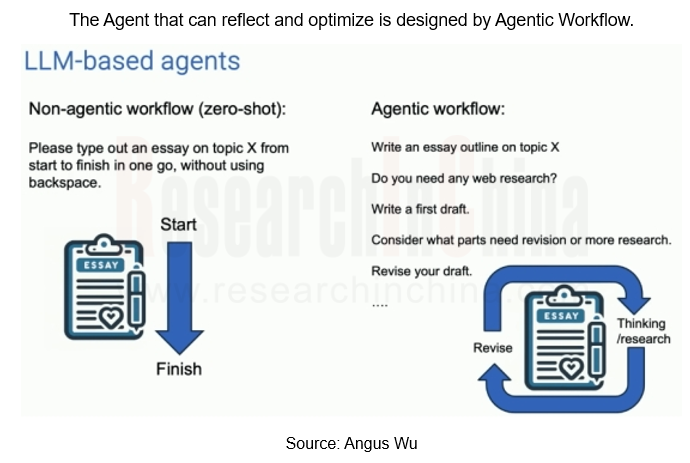

Once Agents reach mass production, personalized cockpits that let users customize scenario modes become the highlight, and Reflection becomes the most important core competence at this stage. This calls for an Agentic Workflow that keeps learning and optimizing.

For example, Lixiang Tongxue, offered by Li Auto, supports creating a scenario with one sentence. It is backed by Mind GPT's built-in memory network and online reinforcement learning capabilities. Mind GPT can remember personalized preferences and habits based on historical conversations. When similar scenarios recur, it can automatically set scenario parameters from historical data to fit the user's original intentions.
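The idea of reusing historical preferences when a similar scenario recurs can be sketched as follows. This is a deliberately simple frequency-based stand-in, not Mind GPT's memory network or its reinforcement learning: the class `ScenarioMemory`, the scenario name `"nap"`, and the parameter names are hypothetical.

```python
from collections import Counter, defaultdict

class ScenarioMemory:
    """Remembers the parameters a user chose for each scenario (hypothetical sketch)."""

    def __init__(self) -> None:
        self._history: dict[str, list[dict]] = defaultdict(list)

    def remember(self, scenario: str, params: dict) -> None:
        # Store a copy so later changes by the caller do not alter the history.
        self._history[scenario].append(dict(params))

    def recall(self, scenario: str, defaults: dict) -> dict:
        """Fill each parameter with the user's most frequent past choice, else the default."""
        result = dict(defaults)
        past = self._history.get(scenario, [])
        for key in defaults:
            values = [p[key] for p in past if key in p]
            if values:
                result[key] = Counter(values).most_common(1)[0][0]
        return result


memory = ScenarioMemory()
memory.remember("nap", {"seat_recline": 40, "ambient_light": "warm"})
memory.remember("nap", {"seat_recline": 40, "ambient_light": "off"})
memory.remember("nap", {"seat_recline": 30, "ambient_light": "off"})

# Parameters never set by the user (here "volume") fall back to the defaults.
nap_settings = memory.recall(
    "nap", {"seat_recline": 20, "ambient_light": "white", "volume": 5}
)
```

A production system would also need to decide what counts as a "similar" scenario and how quickly old preferences should fade; both are left out of this sketch.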

At the AI OS architecture level, take SAIC Z-One as an example:

Z-One introduces an LLM kernel (LLM OS) at the kernel layer. Together with the original microkernel, it controls the interfaces of the AI OS SDK and ASF respectively; the AI OS SDK is scheduled by the LLM and drives the Agent service framework at the application layer. The Z-One AI OS architecture tightly integrates AI with the CPU. Through SOA atomic services, AI is then connected to the vehicle's sensors, actuators and controllers. This architecture, based on a terminal-cloud foundation model, can enhance the computing power of the terminal-side foundation model and reduce operational latency.

Application Difficulty of Cockpit AI Agents

Agents connect to users and execute commands. In the application process, in addition to the technical difficulties of putting foundation models on cars, they also face scenario difficulties. In the process of command reception-semantic analysis-intention reasoning-task execution, the accuracy of the performance results and the delay in human-computer interaction directly affect the user's riding experience.
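Because both accuracy and delay matter, it can help to measure each stage of the chain separately. The sketch below times the four stages named above; the stage functions in `EXAMPLE_STAGES` are trivial placeholders invented for illustration, not real speech or reasoning components.

```python
import time
from typing import Any, Callable

Stage = tuple[str, Callable[[Any], Any]]

def run_pipeline(utterance: str, stages: list[Stage]) -> tuple[Any, dict[str, float]]:
    """Run the stages in order and record each stage's latency in milliseconds."""
    value: Any = utterance
    timings: dict[str, float] = {}
    for name, fn in stages:
        start = time.perf_counter()
        value = fn(value)
        timings[name] = (time.perf_counter() - start) * 1000.0
    return value, timings

# Placeholder stages (assumptions): each one stands in for a real component.
EXAMPLE_STAGES: list[Stage] = [
    ("command_reception", lambda s: s.strip()),
    ("semantic_analysis", lambda s: s.lower().split()),
    ("intention_reasoning", lambda toks: "open_window" if "window" in toks else "unknown"),
    ("task_execution", lambda intent: f"executed:{intent}"),
]

result, timings = run_pipeline("  Open the window ", EXAMPLE_STAGES)
```

Per-stage timings of this kind show where the end-to-end delay accumulates, for example whether intention reasoning on the foundation model dominates the total.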

Humanization of interaction

For example, in the "emotional consultant" scenario, Agents should resonate emotionally with car owners and behave in a human-like way. Generally, there are three forms of anthropomorphism of AI Agents: physical anthropomorphism, personality anthropomorphism, and emotional anthropomorphism.

NIO's NOMI GPT uses "personality anthropomorphism" and "emotional anthropomorphism":

Foundation model performance

In the "encyclopedia question and answer" scenario, Agents may be unable to answer the user's questions accurately, especially open-ended ones, because of LLM hallucination, even after semantic analysis, database search, answer generation and similar steps.

Current solutions include advanced prompting, RAG + knowledge graph, ReAct, CoT/ToT, etc., none of which can completely eliminate LLM hallucination. In the cockpit, external databases, RAG, self-consistency and other methods are more often used to reduce how frequently hallucination occurs.
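Of the methods listed, self-consistency is the easiest to illustrate: the same question is sampled several times and the answer is accepted only if enough samples agree. The sketch below assumes a `sample` callable that returns one model answer per call; `make_sampler` is a canned stand-in for a real model, and the `min_agreement` threshold of 0.6 is an arbitrary choice.

```python
from collections import Counter
from typing import Callable, Optional

def self_consistent_answer(
    question: str,
    sample: Callable[[str], str],
    n: int = 5,
    min_agreement: float = 0.6,
) -> Optional[str]:
    """Sample n answers and return the majority answer, or None if agreement is too low."""
    # Normalize lightly so trivial differences in case or spacing do not split the vote.
    answers = [sample(question).strip().lower() for _ in range(n)]
    best, count = Counter(answers).most_common(1)[0]
    if count / n >= min_agreement:
        return best
    return None  # abstain instead of returning a low-confidence answer

def make_sampler(canned: list[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM: replays canned answers in order."""
    answers = iter(canned)
    return lambda _question: next(answers)
```

Returning `None` lets the cockpit assistant fall back to a retrieval step or simply say it is unsure, rather than state an unstable answer as fact.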

Some foundation model developers have improved on the above solutions. For example, Meta has proposed reducing LLM hallucination through Chain-of-Verification (CoVe). This method breaks fact-checking down into more detailed sub-questions to improve response accuracy, and it mirrors the way humans check facts. It can effectively improve the FACTSCORE metric in long-form generation tasks.

CoVe includes four steps: query, plan verification, execute verification and final verified response.
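The four steps can be sketched as a small pipeline around a generic text-in, text-out model call. This is an illustrative outline under stated assumptions rather than Meta's implementation: the `llm` callable, the prompt wording, and the returned dictionary layout are all invented here.

```python
from typing import Callable

LLM = Callable[[str], str]  # assumed interface: prompt in, completion out

def chain_of_verification(query: str, llm: LLM) -> dict:
    # 1. Query: draft a baseline answer.
    draft = llm(f"Answer the question.\nQuestion: {query}")

    # 2. Plan verification: ask for fact-checking sub-questions, one per line.
    plan = llm(f"List verification questions, one per line, for this draft.\nDraft: {draft}")
    questions = [line.strip() for line in plan.splitlines() if line.strip()]

    # 3. Execute verification: answer each sub-question without showing the draft,
    #    so errors in the draft are less likely to be repeated.
    checks = [(q, llm(f"Answer concisely.\nQuestion: {q}")) for q in questions]

    # 4. Final verified response: revise the draft in light of the checks.
    evidence = "\n".join(f"Q: {q}\nA: {a}" for q, a in checks)
    final = llm(
        f"Revise the draft using the verified facts.\nDraft: {draft}\nFacts:\n{evidence}"
    )
    return {"draft": draft, "checks": checks, "final": final}
```

The function makes one model call per sub-question plus three more, so in a cockpit the added latency would need to be weighed against the accuracy gain.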
