End-to-end intelligent driving research: How Li Auto went from intelligent driving follower to leader

There are two types of end-to-end autonomous driving: global (one-stage) and segmented (two-stage). The former is conceptually clear and has a much lower R&D cost than the latter, because it does not require manually annotated datasets and instead relies on multimodal foundation models developed by Google, Meta, Alibaba and OpenAI. Standing on the shoulders of these technology giants, global end-to-end autonomous driving performs much better than the segmented type, but at an extremely high deployment cost.

Segmented end-to-end autonomous driving still uses a traditional CNN backbone network to extract features for perception, and adopts end-to-end path planning. Although its performance is not as good as that of the global type, its deployment cost is lower. Even so, that cost is still very high compared with the current mainstream traditional “BEV+OCC+decision tree” solution.

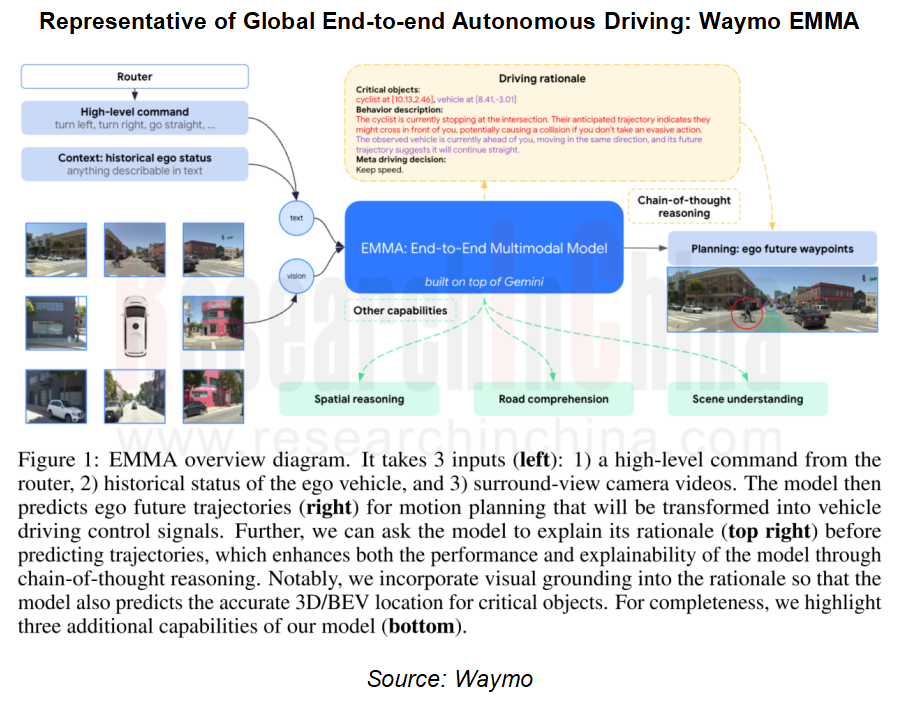

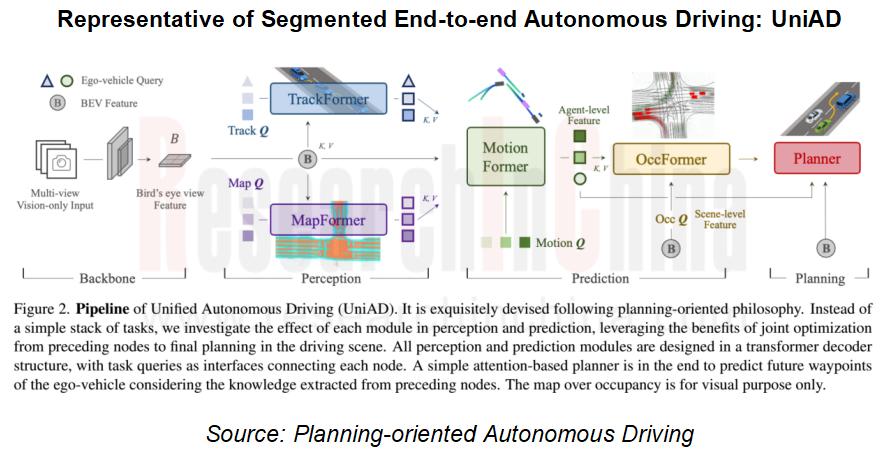

Waymo EMMA, a representative of global end-to-end autonomous driving, takes video as direct input and is built around a multimodal foundation model rather than a backbone network. UniAD is a representative of segmented end-to-end autonomous driving.

Based on whether feedback can be obtained, end-to-end autonomous driving research falls into two main categories: closed-loop research conducted in simulators such as CARLA, where the planned instructions can actually be executed; and open-loop research based on collected real-world data, mainly imitation learning, as in UniAD. End-to-end autonomous driving is currently mostly open-loop, so the model cannot see the effect of executing its own predicted instructions. Without feedback, the evaluation of open-loop autonomous driving is very limited. The two metrics most commonly used in the literature are L2 distance and collision rate.

L2 distance: The L2 distance between the predicted trajectory and the true trajectory is calculated to judge the quality of the predicted trajectory.

Collision rate: The probability of collision between the predicted trajectory and other objects is calculated to evaluate the safety of the predicted trajectory.
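
The two metrics above can be sketched in a few lines of Python. This is a minimal illustration rather than the evaluation code of any specific benchmark: the function names, the `(T, 2)` waypoint layout and the disc-shaped collision check with a fixed `radius` are all assumptions made for the example (published evaluations typically test vehicle boxes or occupancy instead of point distances).

```python
import numpy as np

def l2_distance(pred, gt):
    """Mean Euclidean distance (m) between predicted and true waypoints.

    pred, gt: array-likes of shape (T, 2) with (x, y) at T future timesteps.
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    return float(np.linalg.norm(pred - gt, axis=1).mean())

def collides(pred, obstacles, radius=1.0):
    """True if any predicted waypoint is within `radius` metres of an obstacle
    at the same timestep. obstacles: shape (N, T, 2). Hypothetical disc check."""
    pred = np.asarray(pred, dtype=float)
    obstacles = np.asarray(obstacles, dtype=float)
    if obstacles.size == 0:
        return False
    dists = np.linalg.norm(obstacles - pred[None, :, :], axis=2)  # (N, T)
    return bool((dists < radius).any())

def collision_rate(preds, obstacle_sets, radius=1.0):
    """Fraction of predicted trajectories that collide with any obstacle."""
    flags = [collides(p, o, radius) for p, o in zip(preds, obstacle_sets)]
    return sum(flags) / len(flags)

# Toy usage: the prediction drifts sideways from a straight ground-truth path.
gt = [[0.0, 1.0], [0.0, 2.0], [0.0, 3.0]]
pred = [[0.3, 1.0], [0.4, 2.0], [0.5, 3.0]]
print(f"{l2_distance(pred, gt):.2f}")  # 0.40
```

Because both metrics compare a prediction against recorded data only, neither captures how the scene would have evolved had the vehicle actually followed the predicted path, which is the limitation of open-loop evaluation noted above.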

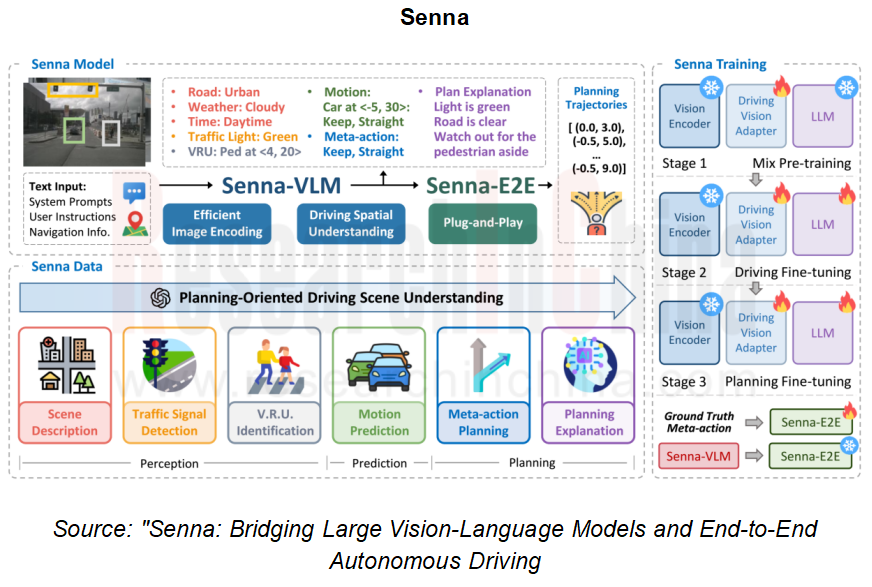

The most attractive thing about end-to-end autonomous driving is its potential for performance improvement. The earliest end-to-end solution is UniAD. A paper at the end of 2022 reported an L2 distance as large as 1.03 meters. The figure fell sharply to 0.55 meters by the end of 2023 and further to 0.22 meters in late 2024. Horizon Robotics is one of the most active companies in the end-to-end field, and its technology development also reflects the overall evolution of the end-to-end route. After UniAD came out, Horizon Robotics immediately proposed VAD, whose concept is similar to that of UniAD but with much better performance. Horizon Robotics then turned to global end-to-end autonomous driving. Its first result was HE-Drive, which had a relatively large number of parameters. Its successor, Senna, has fewer parameters and is also one of the best-performing end-to-end solutions.

The core of some end-to-end systems is still BEVFormer, which uses vehicle CAN bus information by default. This includes explicit signals for the vehicle's speed, acceleration and steering angle, which have a significant impact on path planning. These end-to-end systems still require supervised training, so massive manual annotation is indispensable, which makes the data cost very high. Furthermore, since the concept of GPT is already borrowed, why not use an LLM directly? With this in mind, Li Auto proposed DriveVLM.

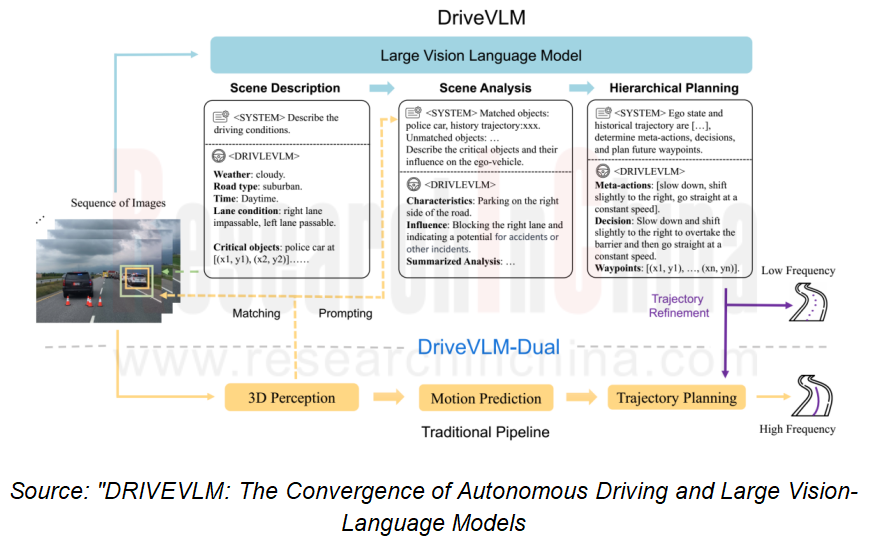

As the figure shows, the DriveVLM pipeline from Li Auto consists of three major modules: scenario description, scenario analysis, and hierarchical planning.

The scenario description module of DriveVLM consists of environment description and key object recognition. Environment description covers general driving conditions in four parts: weather, time, road type, and lane line. Key object recognition finds the objects that have a greater impact on the current driving decision.

Unlike the traditional autonomous driving perception module, which detects all objects, DriveVLM focuses on recognizing the key objects in the current driving scenario that are most likely to affect driving decisions, because detecting all objects would consume enormous computing power. Thanks to pre-training on the massive autonomous driving data accumulated by Li Auto and to the open-source foundation model, the VLM can detect key long-tail objects, such as road debris or unusual animals, better than traditional 3D object detectors.

For each key object, DriveVLM outputs its semantic category (c) and the corresponding 2D bounding box (b). Pre-training comes from the field of NLP foundation models, where annotated data is scarce and very expensive to produce. Pre-training first trains on massive unannotated data to learn the structural features of language, and then takes prompts as labels to solve specific downstream tasks through fine-tuning.

Rather than building on the traditional BEVFormer algorithm, DriveVLM adopts a large multimodal model as its core. Li Auto's DriveVLM leverages Alibaba's foundation model Qwen-VL, with up to 9.7 billion parameters and 448×448 input resolution, and runs inference on NVIDIA Orin.
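
As a rough illustration of what the scenario description output could look like as a data structure, the sketch below models the four environment fields and the per-object pair of semantic category c and 2D box b. All class names, field names and the `(x_min, y_min, x_max, y_max)` pixel layout of the box are assumptions made for this example; the source does not specify DriveVLM's actual output format.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

@dataclass
class EnvironmentDescription:
    # The four parts of the environment description named in the text.
    weather: str
    time: str
    road_type: str
    lane_line: str

@dataclass
class KeyObject:
    category: str  # semantic category c
    # 2D box b; the (x_min, y_min, x_max, y_max) pixel layout is assumed.
    box_2d: Tuple[float, float, float, float]

@dataclass
class SceneDescription:
    environment: EnvironmentDescription
    key_objects: List[KeyObject] = field(default_factory=list)

    def to_text(self) -> str:
        """Render the scene as plain text, e.g. for a downstream analysis step."""
        env = self.environment
        lines = [
            f"Weather: {env.weather}; time: {env.time}; "
            f"road type: {env.road_type}; lane line: {env.lane_line}"
        ]
        for obj in self.key_objects:
            lines.append(f"Key object: {obj.category} at box {obj.box_2d}")
        return "\n".join(lines)

# Hypothetical scene with one long-tail key object.
scene = SceneDescription(
    EnvironmentDescription("rainy", "night", "urban road", "dashed white"),
    [KeyObject("road debris", (100.0, 200.0, 180.0, 260.0))],
)
print(scene.to_text())
```

Keeping only a short list of key objects, rather than every detected object, mirrors the design choice described above of spending compute on the objects most likely to affect the driving decision.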

How does Li Auto transform from a high-level intelligent driving follower into a leader?

At the beginning of 2023, Li Auto was still a laggard in the NOA arena. It began to devote itself to R&D of high-level autonomous driving in 2023, delivered multiple NOA version upgrades in 2024, and launched all-scenario autonomous driving from parking space to parking space in late November 2024, thus becoming a leader in mass production of high-level intelligent driving (NOA).

Looking back at the development of Li Auto's end-to-end intelligent driving, the company not only drew on data from its own hundreds of thousands of users but also worked with a number of partners on R&D of end-to-end models. DriveVLM is the result of cooperation between Li Auto and Tsinghua University.

In addition to DriveVLM, Li Auto launched STR2 with Shanghai Qi Zhi Institute, Fudan University and others, proposed DriveDreamer4D with GigaStudio and the Institute of Automation of the Chinese Academy of Sciences, and adopted an MoE architecture with Tsinghua University.

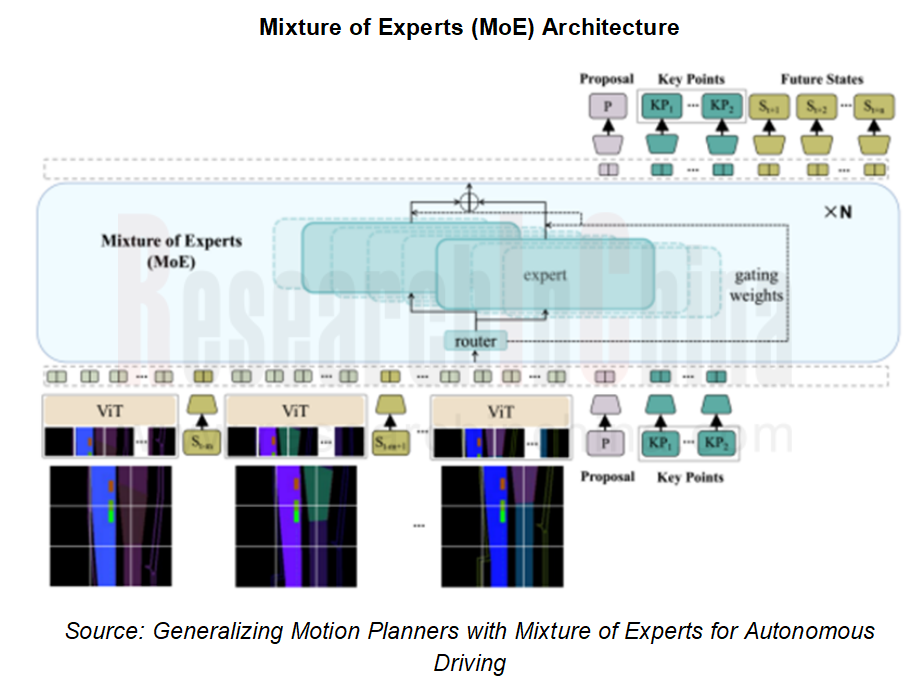

Mixture of Experts (MoE) Architecture

To address the excessive parameter count and computation of foundation models, Li Auto has cooperated with Tsinghua University to adopt an MoE architecture. Mixture of Experts (MoE) is an ensemble learning method that combines multiple specialized sub-models (i.e. "experts") into a complete model. Each "expert" contributes in the area it is good at. The mechanism that determines which "expert" participates in answering a specific question is called a "gating network". Because each expert model can focus on a specific sub-problem, the overall model can achieve better performance on complex tasks. MoE is suitable for processing large datasets and can effectively cope with the challenges of massive data and complex features. That’s because it can handle different sub-tasks in parallel, make full use of computing resources, and improve the training and inference efficiency of models.
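
The gating idea can be illustrated with a toy MoE layer in NumPy. This is a minimal sketch under stated assumptions, not Li Auto's implementation: the class name `TinyMoE`, the use of linear experts, and the top-k routing with softmax-normalized weights are choices made for the example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

class TinyMoE:
    """Toy mixture of linear experts with top-k gating (illustrative only)."""

    def __init__(self, d_in, d_out, n_experts=4, top_k=2, seed=0):
        rng = np.random.default_rng(seed)
        self.top_k = top_k
        self.w_gate = rng.normal(size=(d_in, n_experts))         # gating network
        self.experts = rng.normal(size=(n_experts, d_in, d_out))  # one matrix per expert

    def route(self, x):
        """Return the indices of the top-k experts per sample and their weights."""
        logits = x @ self.w_gate                              # (B, E)
        top = np.argsort(logits, axis=1)[:, -self.top_k:]     # (B, k)
        top_logits = np.take_along_axis(logits, top, axis=1)
        return top, softmax(top_logits, axis=1)               # weights sum to 1

    def __call__(self, x):
        top, weights = self.route(x)
        out = np.zeros((x.shape[0], self.experts.shape[2]))
        for b in range(x.shape[0]):
            for j in range(self.top_k):
                # Only the selected experts are evaluated for this sample.
                out[b] += weights[b, j] * (x[b] @ self.experts[top[b, j]])
        return out

moe = TinyMoE(d_in=8, d_out=3)
x = np.random.default_rng(1).normal(size=(5, 8))
print(moe(x).shape)  # (5, 3)
```

With `top_k` smaller than the number of experts, each input activates only a subset of the parameters, which is how an MoE model can grow its total parameter count without a proportional increase in per-input computation.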

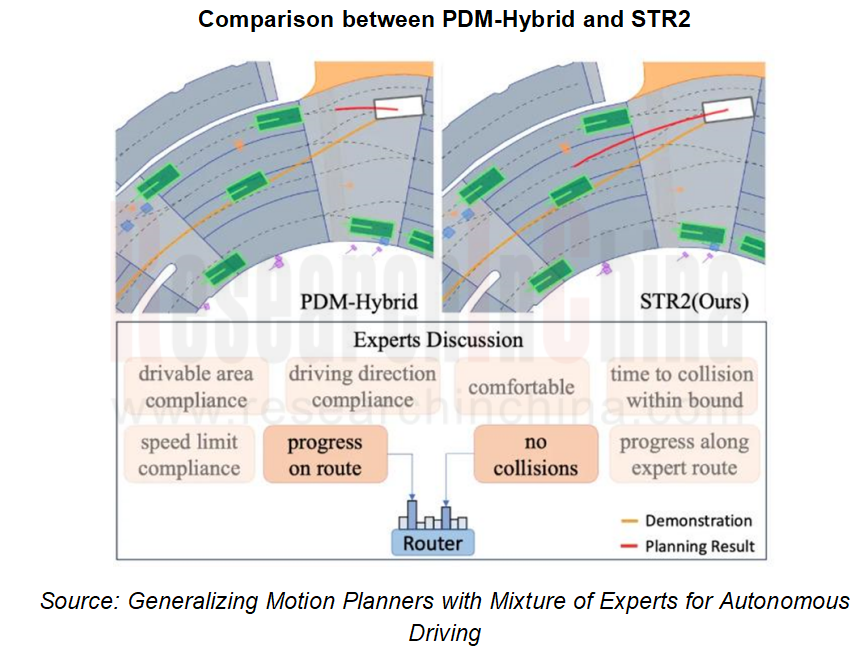

STR2 Path Planner

STR2 is a motion planning solution based on Vision Transformer (ViT) and MoE. It was developed by Li Auto together with researchers from Shanghai Qi Zhi Institute, Fudan University and other universities and institutions.

STR2 is designed specifically for autonomous driving, with the aim of improving generalization in complex and rare traffic conditions.

As an advanced motion planner, STR2 combines a Vision Transformer (ViT) encoder with an MoE causal transformer architecture to learn from and plan effectively in complex traffic environments.

The core idea of STR2 is to use MoE to handle modality collapse and reward balance through expert routing during training, thereby improving the model's generalization in unseen or rare situations.

DriveDreamer4D World Model

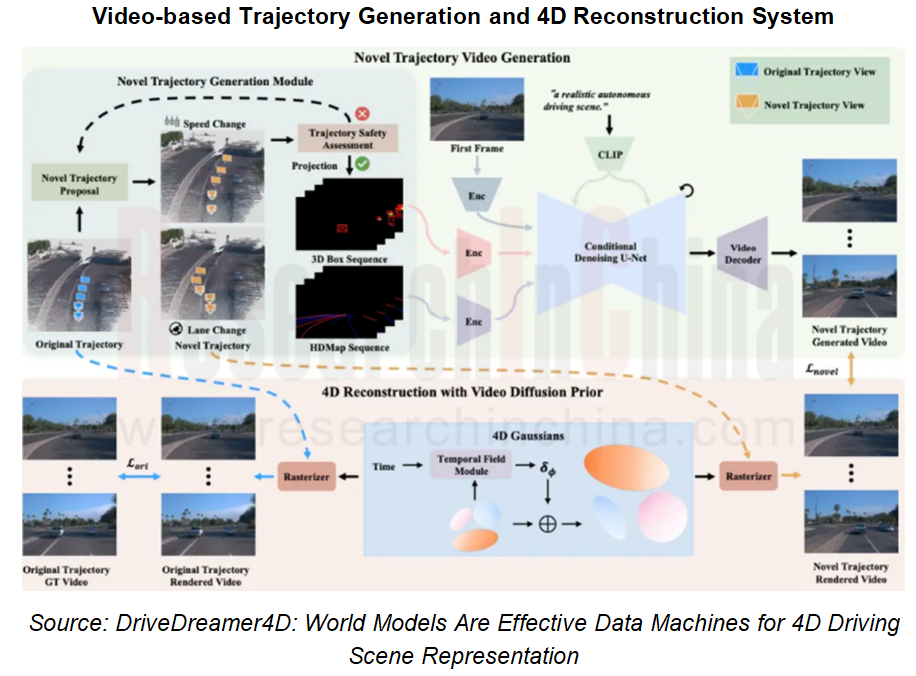

In late October 2024, GigaStudio teamed up with the Institute of Automation of the Chinese Academy of Sciences, Li Auto, Peking University, the Technical University of Munich and other institutions to propose DriveDreamer4D.

DriveDreamer4D uses a world model as a data engine to synthesize new trajectory videos (e.g., lane change) based on real-world driving data.

DriveDreamer4D can also provide rich and diverse viewpoint data (lane change, acceleration and deceleration, etc.) for driving scenarios, strengthening closed-loop simulation in dynamic driving scenarios.

The overall structure is shown in the figure. The novel trajectory generation module (NTGM) adjusts the original trajectory actions, such as steering angle and speed, to generate new trajectories. These new trajectories provide new viewpoints from which structured information (e.g., vehicle 3D boxes and background lane line details) is extracted.
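
The idea of deriving a new trajectory by adjusting recorded actions can be sketched with a simple kinematic rollout. This is a toy illustration, not the NTGM from DriveDreamer4D: the unicycle model, the function names `rollout` and `novel_trajectory`, the use of yaw rate in place of steering angle, and the 0.1 s timestep are all assumptions made for the example.

```python
import numpy as np

def rollout(speeds, yaw_rates, dt=0.1):
    """Integrate a unicycle model from the origin.

    speeds (m/s) and yaw_rates (rad/s) hold one action per timestep.
    Returns positions of shape (T + 1, 2), starting at (0, 0) heading along +x.
    """
    x = y = heading = 0.0
    points = [(x, y)]
    for v, w in zip(speeds, yaw_rates):
        heading += w * dt
        x += v * np.cos(heading) * dt
        y += v * np.sin(heading) * dt
        points.append((x, y))
    return np.array(points)

def novel_trajectory(speeds, yaw_rates, speed_scale=1.0, yaw_offset=None, dt=0.1):
    """Perturb the recorded actions, then roll them out into a new trajectory."""
    speeds = np.asarray(speeds, dtype=float) * speed_scale
    yaw_rates = np.asarray(yaw_rates, dtype=float)
    if yaw_offset is not None:
        yaw_rates = yaw_rates + np.asarray(yaw_offset, dtype=float)
    return rollout(speeds, yaw_rates, dt)

# Recorded drive: straight ahead at 10 m/s for 4 s.
speeds = np.full(40, 10.0)
yaw_rates = np.zeros(40)

# Lane-change variant: steer left for 2 s, then steer back for 2 s.
offset = np.concatenate([np.full(20, 0.1), np.full(20, -0.1)])
original = novel_trajectory(speeds, yaw_rates)
lane_change = novel_trajectory(speeds, yaw_rates, yaw_offset=offset)
print(original[-1], lane_change[-1])
```

In this toy setup the perturbed path ends a few metres to the left of the recorded one while again heading straight, which is the kind of lane-change viewpoint that the recorded video alone does not contain.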

Subsequently, based on the video generation capabilities of the world model and the structured information obtained by updating the trajectories, videos of new trajectories can be synthesized. Finally, the original trajectory videos are combined with the new trajectory videos to optimize the 4DGS model.
