Research Report on Automotive Memory Chip Industry and Its Impact on Foundation Models, 2025

Research on automotive memory chips: driven by foundation models, the performance requirements and costs of automotive memory chips are rising sharply.

From 2D+CNN small models to BEV+Transformer foundation models, the number of model parameters has soared, making memory a performance bottleneck.

The global automotive memory chip market is expected to exceed USD17 billion in 2030, up from about USD4.3 billion in 2023, a CAGR of up to 22% over the period. Memory chips accounted for 8.2% of automotive semiconductor value in 2023, a figure projected to rise to 17.4% in 2030, meaning memory will take a substantially larger share of vehicle semiconductor costs.
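These growth figures can be sanity-checked with the standard CAGR formula, CAGR = (end ÷ start)^(1/years) − 1. The sketch below assumes exactly USD17 billion for 2030 (the text says "over"), so the result is the rate implied by the low end of that estimate.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` years."""
    return (end / start) ** (1 / years) - 1

# Market size in USD billion, from the figures above (2023 -> 2030 is 7 years).
growth = cagr(4.3, 17.0, 2030 - 2023)
print(f"CAGR 2023-2030: {growth:.1%}")

# Memory's share of automotive semiconductor value, 2030 vs 2023.
share_ratio = 17.4 / 8.2
print(f"Memory's share grows {share_ratio:.2f}x")
```

The implied rate is about 21.7%, consistent with "up to 22%", and memory's share of semiconductor value roughly doubles.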

The main driver for the development of the automotive memory chip industry lies in the rapid rise of automotive LLMs. From the previous 2D+CNN small models to BEV+Transformer foundation models, the number of model parameters has significantly increased, leading to a surge in computing demands. CNN models typically have fewer than 10 million parameters, while foundation models (LLMs) generally range from 7 billion to 200 billion parameters. Even after distillation, automotive models can still have billions of parameters.

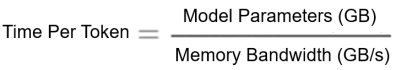

From a computing perspective, the Softmax operator plays a core role in BEV+Transformer foundation models, typically those with a LLaMA-style decoder architecture. Softmax is less parallelizable than traditional convolution operators, which makes memory the bottleneck. Memory-intensive models such as GPT place especially high demands on memory bandwidth, and the autonomous driving SoCs currently on the market often run into the "memory wall".
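To make the Softmax point concrete, here is a plain Python sketch of a numerically stable softmax over one row of attention scores. It is a reference implementation for illustration only; optimized GPU/NPU kernels typically fuse these steps, and the names are illustrative. Each step depends on a reduction over the entire row (first the maximum, then the sum), whereas a convolution output depends only on a small local window of its input.

```python
import math

def softmax(row: list[float]) -> list[float]:
    """Numerically stable softmax over one row of attention scores.

    Each pass needs a result computed over the whole row before the next
    pass can start, so the row must be re-read from memory (or held on
    chip) between passes.
    """
    # Pass 1: global reduction to find the row maximum (for stability).
    m = max(row)
    # Pass 2: exponentiate every element, then reduce to a global sum.
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    # Pass 3: normalize every element by that global sum.
    return [e / total for e in exps]

scores = [2.0, 1.0, 0.1]
probs = softmax(scores)
print([round(p, 3) for p in probs])
```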

An end-to-end model essentially embeds a small LLM. As more data is fed in, the foundation model's parameter count will keep growing: it starts at around 10 billion parameters and, through continuous iteration, is expected to eventually exceed 100 billion.

On April 15, 2025, at its AI sharing event, XPeng disclosed for the first time that it is developing the XPeng World Foundation Model, a 72-billion-parameter ultra-large autonomous driving model. XPeng's experimental results show a clear scaling-law effect across models with 1 billion, 3 billion, 7 billion, and 72 billion parameters: the larger the parameter scale, the more capable the model. For models of the same size, more training data yields better performance.

The main bottleneck in multimodal model training lies not only in GPUs but also in data access efficiency. XPeng has developed its own underlying data infrastructure (Data Infra), increasing data upload capacity 22-fold and training data bandwidth 15-fold. By optimizing both GPU/CPU and network I/O, it has made model training 5 times faster. XPeng currently trains its foundation model on up to 20 million video clips, a figure set to rise to 200 million this year.

In the future, XPeng will deploy the XPeng World Foundation Model to vehicles by distilling it into small models in the cloud. Because the parameter scale of automotive foundation models will only keep growing, computing chips and memory face significant challenges. To address this, XPeng has developed its own Turing AI chip, whose utilization is 20% higher than that of general automotive high-performance chips and which can handle foundation models with up to 30B (30 billion) parameters. By contrast, Li Auto's current VLM (Vision-Language Model) has about 2.2 billion parameters.

More model parameters usually mean higher inference latency, so solving the latency problem is crucial. The Turing AI chip is expected to substantially improve memory bandwidth, possibly through a multi-channel design or advanced packaging, so that 30B-parameter foundation models can run locally.

Memory bandwidth determines the upper limit of inference computing speed. LPDDR5X is widely adopted but still falls short. GDDR7 and HBM may be put on the agenda.

Memory bandwidth determines the upper limit of inference speed, because in autoregressive decoding every generated token requires reading all of the model's weights from memory once. Assume a foundation model has 7 billion parameters; at INT8 precision (one byte per parameter) for automotive use, it occupies 7GB. Tesla's first-generation FSD chip has memory bandwidth of 63.5GB/s, so it generates at most one token every 110 milliseconds (7GB ÷ 63.5GB/s ≈ 0.110s), a rate below 10Hz, compared with the typical image frame rate of 30Hz in autonomous driving. Nvidia Orin, with memory bandwidth of 204.5GB/s, generates one token every 34 milliseconds (7GB ÷ 204.5GB/s ≈ 0.0342s), barely reaching 30Hz (frame rate = 1 ÷ 0.0342s ≈ 29Hz). Note that this counts only data transfer time and ignores actual computation, so real-world speed will be considerably lower than these figures.
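The bandwidth-bound estimate above can be reproduced in a few lines. This sketch makes the same simplifying assumptions as the text: each token reads all weights once, compute time is ignored, and on-chip caching and KV-cache traffic are not modeled. The function name is illustrative.

```python
def min_token_latency_ms(params_billion: float, bytes_per_param: float,
                         bandwidth_gb_s: float) -> float:
    """Lower bound on per-token latency when decoding is memory-bound.

    Assumes every generated token streams all weights from DRAM once and
    ignores compute time, caches and KV-cache traffic.
    """
    model_gb = params_billion * bytes_per_param  # 7B params at INT8 -> 7GB
    return model_gb / bandwidth_gb_s * 1000

chips = {"Tesla FSD gen 1": 63.5, "Nvidia Orin": 204.5}
for name, bw in chips.items():
    ms = min_token_latency_ms(7, 1.0, bw)  # INT8 = 1 byte per parameter
    print(f"{name}: {ms:.1f} ms/token, at most {1000 / ms:.1f} tokens/s")
```

Changing `bytes_per_param` shows how much quantization helps: at INT4 (0.5 bytes per parameter) the same model needs half the transfer time.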

DRAM Selection Path (1): LPDDR5X will be widely adopted, and the LPDDR6 standard is still being formulated.

Apart from Tesla, all current automotive chips support at most LPDDR5, and the industry's next step is to promote LPDDR5X. For example, Micron has launched an LPDDR5X + DLEP DRAM automotive solution that has passed ISO 26262 ASIL-D certification and meets critical automotive functional safety (FuSa) requirements.

Nvidia Thor-X already supports automotive LPDDR5X, raising memory bandwidth to 273GB/s, as well as the PCIe 5.0 interface. Thor-X-Super reaches a remarkable 546GB/s, using 512-bit-wide LPDDR5X memory for very high data throughput. In reality, the Super version simply integrates two X chips into one package, similar to Apple's approach with its chip series, and it is not expected to enter mass production in the short term.
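Peak DRAM bandwidth is simply interface width times transfer rate. The sketch below assumes LPDDR5X running at 8533MT/s and a 256-bit interface for Thor-X (half of the Super's 512 bits, since the Super is described as two X chips). Neither the data rate nor the Thor-X width is stated in the text, but together they reproduce both quoted figures.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_mt_s: float) -> float:
    """Peak DRAM bandwidth in GB/s = bus width (bits) x transfers/s / 8."""
    return bus_width_bits * data_rate_mt_s * 1e6 / 8 / 1e9

# Assumption: LPDDR5X at 8533 MT/s; 256-bit Thor-X width is inferred.
for name, width in [("Thor-X", 256), ("Thor-X-Super", 512)]:
    print(f"{name}: {peak_bandwidth_gb_s(width, 8533):.0f} GB/s")
```

The same formula applied to an HBM2E stack's 1024-bit interface at 3.2Gbps gives 409.6GB/s, consistent with the roughly 410GB/s quoted for SK Hynix's HBM2E in Waymo's Robotaxis.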

Thor comes in multiple versions, five of which are currently known: ① Thor-Super, with 2000T computing power; ② Thor-X, with 1000T computing power; ③ Thor-S, with 700T computing power; ④ Thor-U, with 500T computing power; ⑤ Thor-Z, with 300T computing power. Lenovo's Thor central computing unit, billed as the world's first, is planned to use dual Thor-X chips.

Micron's 9600MT/s LPDDR5X is already sampling, but it targets mobile devices and no automotive-grade version is available yet. Samsung's new LPDDR5X product, the K3KL9L90DM-MHCU, targets applications ranging from PCs, servers and vehicles to emerging on-device AI. It is 1.25 times faster and 25% more power-efficient than the previous generation, has a maximum operating temperature of 105°C, and entered mass production in early 2025. A single K3KL9L90DM-MHCU has 8GB capacity and a x32 bus; eight chips total 64GB.

As LPDDR5X moves toward 9600Mbps or even 10Gbps, JEDEC has begun developing the next-generation LPDDR6 standard, targeting 6G communications, L4 autonomous driving, and immersive AR/VR scenarios. LPDDR6 is expected to exceed 10.7Gbps and possibly reach 14.4Gbps, with improvements in both bandwidth and energy efficiency (50% better than current LPDDR5X). However, mass production of LPDDR6 may not begin until 2026. Qualcomm's next-generation flagship chip, the Snapdragon 8 Elite Gen 2 (codenamed SM8850), will support LPDDR6. Automotive-grade LPDDR6 may take even longer to arrive.

DRAM Selection Path (2): GDDR6 is already installed in vehicles but faces cost and power consumption issues. A GDDR7+LPDDR5X hybrid memory architecture may be viable.

Aside from LPDDR5X, another path is GDDR6 or GDDR7. Tesla’s second-generation FSD chip already supports first-generation GDDR6. HW4.0 uses 32GB of GDDR6 (model MT61M512M32KPA-14) running at 1750MHz (for comparison, even the lowest LPDDR5 frequency is above 3200MHz). Because it is first-generation GDDR6, its speed is relatively low. Even with GDDR6, running a 10-billion-parameter foundation model smoothly remains infeasible, although it is currently the best option available.

Tesla’s third-gen FSD chip is likely under development and may be completed in late 2025, with support for at least GDDR6X.

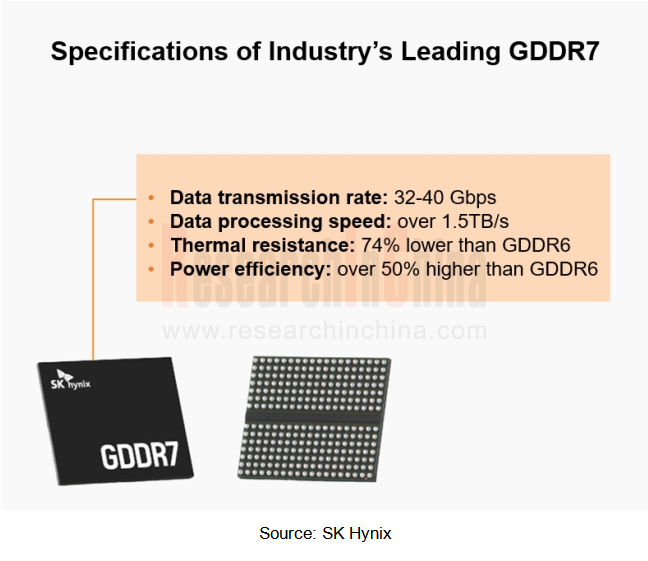

The next-generation GDDR7 standard was officially released in March 2024, although Samsung had already unveiled the world’s first GDDR7 in July 2023. Both SK Hynix and Micron have since introduced GDDR7 products. Using GDDR requires a dedicated physical layer (PHY) and controller built into the chip; companies such as Rambus and Synopsys sell the relevant IP.

Future autonomous driving chips may adopt a hybrid memory architecture, for example using GDDR7 for high-load AI tasks and LPDDR5X for low-power general computing, to balance performance and cost.
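The hybrid idea can be illustrated with a toy placement policy. This is entirely hypothetical: the `Buffer` type, the greedy traffic-per-GB rule, and all sizes and traffic figures are assumptions for illustration, not any vendor's memory manager. Bandwidth-hungry data with a small footprint goes to the small, fast, costly pool; everything else goes to the larger, cheaper, low-power pool.

```python
from dataclasses import dataclass

@dataclass
class Buffer:
    name: str
    size_gb: float
    traffic_gb_s: float  # sustained read/write traffic this buffer generates

def place(buffers: list[Buffer], fast_capacity_gb: float) -> dict[str, str]:
    """Greedy placement: buffers with the most traffic per GB go to the
    fast pool (e.g. GDDR7) while it has room; the rest go to the
    low-power pool (e.g. LPDDR5X)."""
    placement: dict[str, str] = {}
    remaining = fast_capacity_gb
    for buf in sorted(buffers, key=lambda b: b.traffic_gb_s / b.size_gb,
                      reverse=True):
        if buf.size_gb <= remaining:
            placement[buf.name] = "GDDR7"
            remaining -= buf.size_gb
        else:
            placement[buf.name] = "LPDDR5X"
    return placement

# Hypothetical workload; sizes and traffic are illustrative only.
workload = [
    Buffer("model_weights", 8.0, 240.0),
    Buffer("kv_cache", 2.0, 60.0),
    Buffer("map_data", 4.0, 2.0),
    Buffer("os_and_apps", 6.0, 5.0),
]
result = place(workload, fast_capacity_gb=12.0)
print(result)
```

A greedy ratio rule is the simplest possible choice; a real system would also weigh latency, power budgets, and data whose access pattern changes between driving phases.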

DRAM Selection Path (3): HBM2E is already deployed in L4 Robotaxis but remains far from production passenger cars. Memory chip vendors are working on migration of HBM technology from data centers to edge devices.

High bandwidth memory (HBM) is used primarily in servers. It stacks SDRAM dies using TSV technology, which raises not only the cost of the memory itself but also adds the cost of TSMC's CoWoS packaging process, whose capacity is currently tight and expensive. HBM is therefore far more expensive than the LPDDR5X, LPDDR5, and LPDDR4X commonly used in production passenger cars, and is not economical for them.

SK Hynix’s HBM2E is used exclusively in Waymo’s L4 Robotaxis, offering 8GB capacity, a transfer rate of 3.2Gbps, and bandwidth of 410GB/s, setting a new industry benchmark.

SK Hynix is currently the only vendor able to supply HBM that meets the stringent AEC-Q automotive standards, and it is actively working with leading autonomous driving players such as NVIDIA and Tesla to extend HBM from AI data centers to intelligent vehicles.

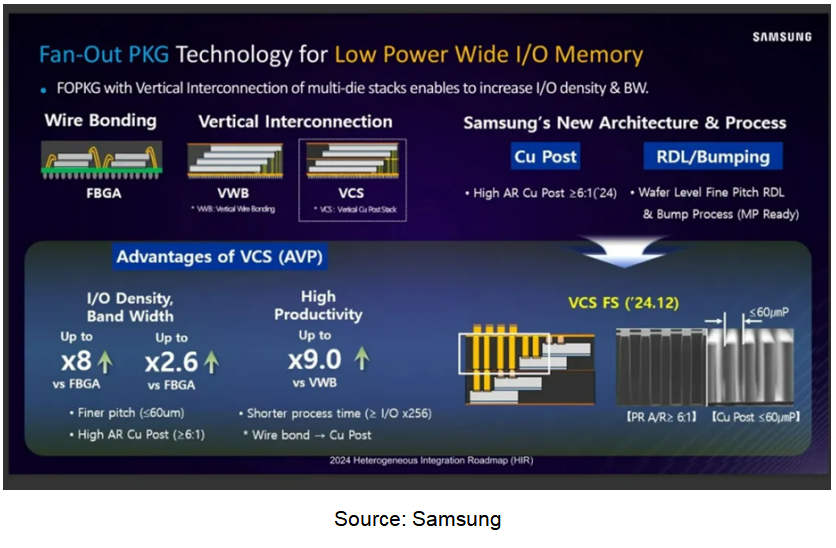

Both SK Hynix and Samsung are working to migrate HBM from data centers to edge devices such as smartphones and cars. In mobile devices, HBM adoption will focus on improving edge AI performance while keeping power consumption low, driven by technological innovation and industry chain synergy. Cost and yield remain the main short-term challenges, and overcoming them depends mainly on improving HBM production processes.

Key Differences: Traditional data center HBM is a "high bandwidth, high power consumption" solution designed for high-performance computing, while on-device HBM is a "moderate bandwidth, low power consumption" solution tailored for mobile devices.

Technology Path: Traditional data center HBM relies on TSV and interposers, whereas on-device HBM achieves performance breakthroughs through packaging innovations (e.g., vertical wire bonding) and low-power DRAM technology.

For example, Samsung’s LPW DRAM (Low-Power Wide I/O DRAM) uses similar technology, offering low latency and up to 128GB/s bandwidth while consuming only 1.2pJ/b. It is expected to enter mass production during 2025-2026.

LPW DRAM stacks LPDDR DRAM to greatly increase the number of I/O interfaces, achieving the dual goals of higher performance and lower power consumption. Its bandwidth can exceed 200GB/s, 166% higher than LPDDR5X, and its power consumption falls to 1.9pJ/bit, 54% lower than LPDDR5X.
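Energy per bit translates directly into interface power: power = bits transferred per second × energy per bit. The sketch uses this paragraph's 200GB/s and 1.9pJ/bit figures and assumes the "54% lower" comparison means LPDDR5X spends about 1.9 ÷ 0.46 ≈ 4.1pJ/bit. It covers data movement only, not refresh or core power, and the helper name is illustrative.

```python
def io_power_w(bandwidth_gb_s: float, energy_pj_per_bit: float) -> float:
    """Interface power in watts = bits moved per second x energy per bit."""
    bits_per_s = bandwidth_gb_s * 1e9 * 8
    return bits_per_s * energy_pj_per_bit * 1e-12

# LPW DRAM at the figures quoted above.
lpw_w = io_power_w(200, 1.9)

# Assumption: "54% lower than LPDDR5X" implies LPDDR5X's energy per bit.
lpddr5x_pj = 1.9 / (1 - 0.54)
lpddr5x_w = io_power_w(200, lpddr5x_pj)

print(f"LPW DRAM at 200GB/s: {lpw_w:.2f} W")
print(f"Same traffic at LPDDR5X's {lpddr5x_pj:.1f} pJ/bit: {lpddr5x_w:.2f} W")
```

At 200GB/s of traffic, LPW DRAM would spend roughly 3W on data movement, versus roughly 6.6W at LPDDR5X's implied energy per bit.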

UFS 3.1 has already been widely adopted in vehicles and will gradually iterate to UFS 4.0 and UFS 5.0, while PCIe SSD will become the preferred choice for L3/L4 high-level autonomous vehicles.

At present, high-level autonomous vehicles generally adopt UFS 3.1 storage. As vehicle sensors and computing power advance, higher-specification data transmission becomes imperative, and UFS 4.0 will become one of the mainstream options. UFS 3.1 offers a maximum speed of 2.9GB/s, several times slower than PCIe SSDs. The next-generation UFS 4.0 reaches 4.2GB/s while cutting power consumption by 30% compared with UFS 3.1. UFS 5.0 is expected around 2027 with speeds of about 10GB/s, still well below SSDs but with the advantages of controllable costs and a stable supply chain.

Given the strong demand for foundation models from both the cockpit and autonomous driving, and to ensure sufficient performance headroom, SSDs should be adopted instead of today's mainstream UFS (not fast enough) or eMMC (even slower). Automotive SSDs use the PCIe interface, which offers great flexibility and potential. JEDEC's JESD312 defines automotive SSDs based on PCIe 4.0, which in fact covers multiple rates: four lanes is the lowest configuration, and a 16-lane full-duplex link can reach 64GB/s. PCIe 5.0, released in 2019, doubles the signaling rate to 32GT/s, bringing x16 full-duplex bandwidth close to 128GB/s.
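The PCIe figures can be reproduced from the per-lane signaling rate. This sketch assumes the 128b/130b line encoding used since PCIe 3.0 and ignores packet and protocol overhead (TLP headers, flow control). `pcie_gb_s` is an illustrative helper name.

```python
# Per-lane signaling rates in GT/s; PCIe 3.0 and later use 128b/130b encoding.
GT_PER_S = {"PCIe 4.0": 16, "PCIe 5.0": 32}

def pcie_gb_s(gen: str, lanes: int, duplex: bool = False) -> float:
    """Link bandwidth in GB/s after 128b/130b encoding overhead.

    Ignores packet/protocol overhead, so real throughput is somewhat lower.
    """
    per_direction = GT_PER_S[gen] * lanes * (128 / 130) / 8
    return per_direction * (2 if duplex else 1)

for gen in GT_PER_S:
    for lanes in (4, 16):
        print(f"{gen} x{lanes}: {pcie_gb_s(gen, lanes):.1f} GB/s per direction, "
              f"{pcie_gb_s(gen, lanes, duplex=True):.1f} GB/s both directions")
```

The round numbers in the text (64GB/s and close to 128GB/s) are raw rates before encoding; after encoding, x16 links give about 63GB/s and 126GB/s with both directions combined. A PCIe 5.0 x4 link tops out near 15.75GB/s per direction, leaving some room above the 14.5GB/s read speed cited for PCIe 5.0 x4 SSDs.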

Currently, both Micron and Samsung offer automotive-grade SSD. Samsung AM9C1 Series ranges from 128GB to 1TB, while Micron 4150AT Series comes in 220GB, 440GB, 900GB, and 1800GB capacities. The 220GB version is suitable for standalone cockpit or intelligent driving, while cockpit-driving integration requires at least 440GB.

A multi-port BGA SSD can serve as a centralized storage and computing unit in the vehicle. It connects through multiple ports to the SoCs for the cockpit, ADAS, gateway, and more, and processes and stores each type of data in its designated area. This isolation ensures that non-core SoCs cannot access critical data without authorization, preventing interference with, misidentification of, or corruption of core SoC data. It maximizes the isolation and independence of data transmission while also reducing the storage hardware cost of each SoC.
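The access isolation described above can be sketched as a per-port permission table. Everything here is hypothetical: the port and region names, the `POLICY` table, and the `allowed` helper are illustrative and do not describe any vendor's firmware. In NVMe terms, a comparable effect is roughly achieved by attaching namespaces only to specific controllers.

```python
from enum import Flag, auto

class Access(Flag):
    NONE = 0
    READ = auto()
    WRITE = auto()

# Hypothetical policy: each SoC port owns its own region, and any
# cross-region access must be granted explicitly.
POLICY = {
    ("adas_soc", "adas_region"): Access.READ | Access.WRITE,
    ("cockpit_soc", "cockpit_region"): Access.READ | Access.WRITE,
    ("gateway_soc", "gateway_region"): Access.READ | Access.WRITE,
    ("cockpit_soc", "adas_region"): Access.READ,  # e.g. display ADAS status
}

def allowed(port: str, region: str, op: Access) -> bool:
    """Return True only if `port` was explicitly granted `op` on `region`."""
    granted = POLICY.get((port, region), Access.NONE)
    return op in granted

print(allowed("cockpit_soc", "adas_region", Access.READ))   # granted read
print(allowed("cockpit_soc", "adas_region", Access.WRITE))  # no write grant
```

Defaulting to `Access.NONE` for any unlisted pair is the key design choice: a non-core SoC gets no access to critical data unless a rule grants it.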

For future L3/L4 high-level autonomous vehicles, PCIe 5.0 x4 + NVMe 2.0 will be the preferred choice for high-performance storage:

Ultra-high-speed transmission: read speeds of up to 14.5GB/s and write speeds of up to 13.6GB/s, more than three times faster than UFS 4.0.

Low latency & high concurrency: supports higher queue depths (QD32+) for parallel processing of multiple data streams.

AI computing optimization: works with vehicle SoCs to accelerate AI inference, meeting the requirements of fully autonomous driving.
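The benefit of deeper queues follows from Little's law: sustained throughput equals the number of outstanding requests divided by the time each one takes. The sketch below assumes a fixed 100µs command latency and 4KiB random reads, both illustrative assumptions. It also treats latency as constant as queue depth grows, which real drives only approximate until they saturate.

```python
def iops(queue_depth: int, latency_us: float) -> float:
    """Little's law: throughput = outstanding requests / time per request."""
    return queue_depth / (latency_us * 1e-6)

LATENCY_US = 100.0  # assumed per-command latency, for illustration only
for qd in (1, 8, 32):
    ops = iops(qd, LATENCY_US)
    # 4 KiB random reads: bytes per second = IOPS x 4096
    print(f"QD{qd}: {ops:,.0f} IOPS, {ops * 4096 / 1e9:.2f} GB/s")
```

Under these assumptions, moving from QD1 to QD32 raises throughput 32-fold, which is why parallel data streams from multiple sensors benefit from NVMe's deep queues.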

In autonomous driving applications, PCIe NVMe SSD can cache AI computing data, reducing memory access pressure and improving real-time processing capabilities. For example, Tesla’s FSD system uses a high-speed NVMe solution to store autonomous driving training data to enhance perception and decision-making efficiency.

Synopsys has already launched the world’s first automotive-grade PCIe 5.0 IP solution, which includes a PCIe controller, security module, physical layer device (PHY), and verification IP, and complies with the ISO 26262 and ISO/SAE 21434 standards. This suggests that PCIe 5.0 will soon be available for automotive applications.
