China Automotive Multimodal Interaction Development Research Report, 2022

Multimodal interaction research: more hardware is joining cockpit interaction, and the immersive cockpit experience keeps improving

ResearchInChina's “China Automotive Multimodal Interaction Development Research Report, 2022” analyzes three aspects: the installation status of mainstream interaction modes, the interaction modes applied in mainstream vehicle models, and suppliers' cockpit interaction solutions.

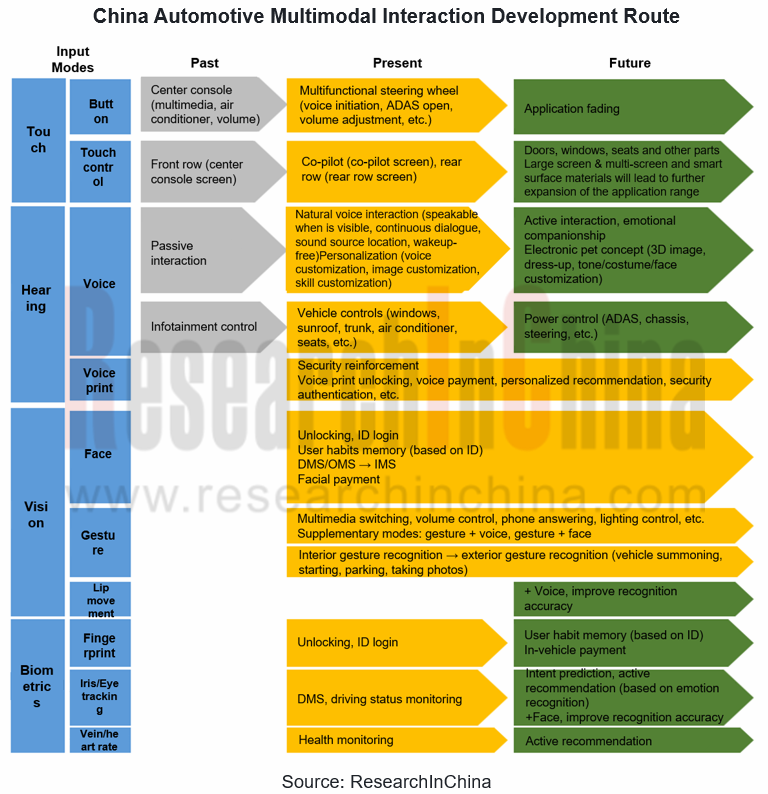

1. Guided by the "third space" concept, multimodal interaction is being widely applied in intelligent cockpits, showing five main features.

(1) With the trend toward large screens, multiple screens and smart surface materials, touch interaction is gradually expanding its range of applications

Large center console screens have made touch control the mainstream interaction mode. For example, the Mercedes-Benz EQS and XPeng P7 have almost no physical buttons on the center console; nearly all controls are operated by touch.

Multi-screen cockpits extend touch interaction from the front row to the rear, and from the center console and co-pilot infotainment screens to the doors, windows, seats and other components. For example, the Li Auto L9 eliminates the traditional instrument panel and replaces it with a small TouchBar above the steering wheel; it also carries a co-pilot screen and a rear audio/video screen, enabling five-screen interaction.

(2) Voice interaction is evolving from passive to active, and personalized and emotional needs are being met

See-and-speak, continuous dialogue, sound source localization, wake-word-free activation and other voice technologies have been widely installed in new cars launched in 2022, making voice interaction more natural.

Personalized experience is currently the focus of voice functions. Intelligent EV brands such as NIO, XPeng and Li Auto are mainly optimizing voice customization, assistant avatar customization, skill customization, etc.

In the future, voice functions are expected to help realize concepts such as emotional companionship and electronic pets.

(3) Face recognition algorithms are driving large-scale installation of DMS, OMS and IMS

Face recognition-based ID login and user preference presets have been implemented in the NIO ET7/ET5, XPeng P7/G9, AITO M5/M7, Neta S and Voyah Dreamer. AITO, for example, can automatically log in to a Huawei account through face recognition, syncing the user's schedule, navigation information, call records, music and video memberships and third-party app data, and automatically switching driving settings so that information flows seamlessly.
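The face-recognition ID login described above generally comes down to comparing a face embedding from the cabin camera against enrolled profiles and loading the best match's presets. The following is a minimal sketch under stated assumptions: embeddings are taken as already produced by some face recognition model (not shown), and the `Profile` class, the `match_profile` function and the 0.6 similarity threshold are hypothetical illustrations, not any OEM's implementation.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field


@dataclass
class Profile:
    """Hypothetical enrolled user: a reference face embedding plus cockpit presets."""
    name: str
    embedding: list[float]
    presets: dict[str, object] = field(default_factory=dict)


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def match_profile(probe: list[float], profiles: list[Profile],
                  threshold: float = 0.6) -> Profile | None:
    """Return the enrolled profile most similar to the probe, or None below the threshold."""
    best, best_score = None, threshold
    for profile in profiles:
        score = cosine_similarity(probe, profile.embedding)
        if score >= best_score:
            best, best_score = profile, score
    return best


# Toy 3-D embeddings for illustration; real face models output much longer vectors.
driver = Profile("driver_a", [0.9, 0.1, 0.0], {"seat_position": 3, "ac_temp_c": 22})
passenger = Profile("passenger_b", [0.0, 0.2, 0.95], {"seat_position": 5})
user = match_profile([0.88, 0.12, 0.05], [driver, passenger])
if user is not None:
    print(user.name, user.presets)  # → driver_a {'seat_position': 3, 'ac_temp_c': 22}
```

Returning `None` below the threshold lets the cockpit fall back to a guest profile or another login method rather than loading the wrong user's settings.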

Face payment is still rarely applied: it has been installed on the Toyota Harrier Premium Edition, and the XPeng P7 will gain it through an OTA update.

Face recognition-based DMS, OMS and IMS are being installed on a large scale, which will drive the adoption of in-cockpit cameras.

(4) Gesture recognition offers limited functions and is installed as a complementary interaction mode

Currently, gesture recognition is mainly applied to multimedia switching, volume control, answering calls, lighting control, etc., and serves mainly as a supplementary interaction mode.

In the future, gesture recognition is expected to be combined with ADAS functions to achieve vehicle summoning, starting and parking based on exterior visual perception.

(5) In-vehicle applications of fingerprint, iris, vein, heart rate and other biometrics are still at the exploration stage

Fingerprint recognition is expected to be applied in user ID login and in-vehicle payment scenarios.

Iris recognition and eye tracking will improve the accuracy of in-cockpit monitoring such as DMS, and hold future potential in intention prediction and proactive recommendation.

Vein, heart rate and similar biometrics will be applied to in-vehicle health functions.

2. Interaction between multimodal recognition and hardware such as large and multiple screens, AR-HUD, AR/VR, ambient lighting and high-quality audio is being enhanced, and the immersive cockpit experience continues to improve

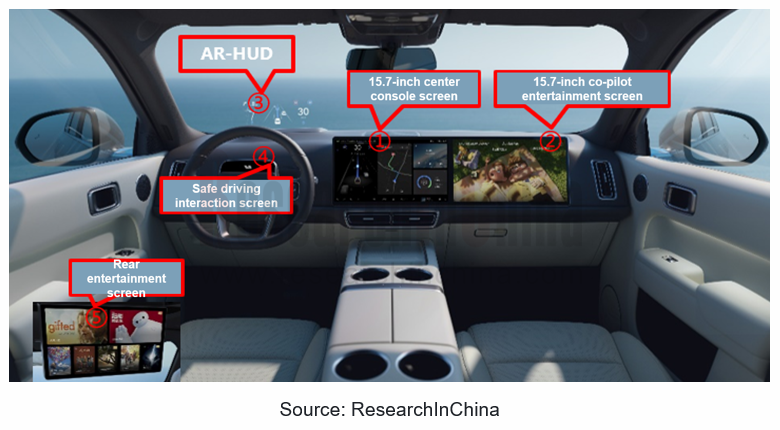

(1) Li Auto L9: creating a multimodal interaction experience through five screens, voice and gestures

Features of Li Auto L9 interaction:

Replaces the traditional instrument cluster with a HUD + safe driving interaction screen.

Enhances the audiovisual experience through a 15.7-inch center console screen + 15.7-inch co-pilot screen + HUD + safe driving interaction screen + 15.7-inch rear entertainment screen; the 15.7-inch screens offer 3K HD resolution and high color reproduction.

6-zone voice recognition (AISpeech) + self-developed speech engine (in cooperation with Microsoft) + 3D ToF sensor (gesture interaction, cockpit monitoring) together enable multimodal interaction.

Voice + gesture fusion: for example, pointing at the sunshade and saying "open this" opens the sunshade.

A Nintendo Switch can be connected to the rear entertainment screen via Type-C to create in-cockpit gaming scenarios.

Two Qualcomm Snapdragon 8155 chips, 24GB RAM + 256GB high-speed storage, and dual 5G with carrier switching provide computing power and network support.
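The voice + gesture example above ("open this" while pointing at the sunshade) is a case of resolving a spoken reference by combining two modalities. Below is a minimal decision-level (late) fusion sketch, assuming the speech engine outputs an action with a possibly missing target and the ToF gesture pipeline outputs pointed-at components with confidences. All class and function names (`VoiceIntent`, `PointingEvent`, `resolve_command`), the 1.5 s time window and the 0.5 confidence floor are hypothetical, not Li Auto's implementation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class VoiceIntent:
    """Hypothetical speech-engine output: an action plus an optional explicit target."""
    action: str            # e.g. "open"
    target: str | None     # None when the user said a deictic word such as "this"
    timestamp: float       # seconds


@dataclass
class PointingEvent:
    """Hypothetical gesture-pipeline output: which cabin component a hand points at."""
    target: str            # e.g. "sunshade"
    confidence: float      # 0.0 to 1.0
    timestamp: float       # seconds


def resolve_command(intent: VoiceIntent, pointing: list[PointingEvent],
                    window_s: float = 1.5, min_conf: float = 0.5) -> tuple[str, str] | None:
    """Fill a missing voice target with the most confident pointing event near the utterance."""
    if intent.target is not None:
        return intent.action, intent.target  # voice alone was unambiguous
    candidates = [
        p for p in pointing
        if abs(p.timestamp - intent.timestamp) <= window_s and p.confidence >= min_conf
    ]
    if not candidates:
        return None  # ask the user to clarify instead of guessing
    best = max(candidates, key=lambda p: p.confidence)
    return intent.action, best.target


intent = VoiceIntent(action="open", target=None, timestamp=10.2)
events = [PointingEvent("sunshade", 0.92, 10.0), PointingEvent("window", 0.40, 10.1)]
print(resolve_command(intent, events))  # → ('open', 'sunshade')
```

Keeping the speech and gesture pipelines independent and joining their outputs only through a time window is one simple design choice; a production system might instead fuse features earlier or use gaze as an additional cue.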

(2) NIO, Li Auto, Audi and others take the lead in offering AR/VR glasses for an immersive cockpit experience

Jointly developed with NREAL, NIO's AR glasses project a virtual screen equivalent to 201 inches at a viewing distance of 6m (optional accessory priced at RMB 2,299). NIO's VR glasses, jointly developed with NOLO, are equipped with ultra-thin pancake optical lenses for binocular 4K display.

Li Auto's AR glasses are provided by Leiniao Technology, a TCL subsidiary, and have been available on the Li Auto Store since August 2022. The Leiniao Air adopts a polarization Birdbath + MicroOLED solution and connects directly to the rear audio and video system of the Li Auto L9: plugging the cable on the glasses' temple into the rear-row DP port gives users a 140-inch virtual screen at a viewing distance of 4m.
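Quoted virtual screen sizes only become comparable once the viewing distance is factored in. Assuming a flat virtual screen centered on the line of sight, a diagonal D viewed at distance d subtends a diagonal field of view θ = 2·arctan(D / (2d)). The short calculation below applies that formula to the two figures quoted above; the helper name `diagonal_fov_deg` is ours.

```python
import math

INCH_TO_M = 0.0254  # exact definition of the inch


def diagonal_fov_deg(diagonal_in: float, distance_m: float) -> float:
    """Diagonal field of view (degrees) of a flat virtual screen centered on the line of sight."""
    half_diagonal_m = diagonal_in * INCH_TO_M / 2
    return math.degrees(2 * math.atan(half_diagonal_m / distance_m))


for label, size_in, dist_m in [("NIO / NREAL", 201, 6.0), ("Li Auto / Leiniao Air", 140, 4.0)]:
    print(f"{label}: {diagonal_fov_deg(size_in, dist_m):.1f} deg")
# → NIO / NREAL: 46.1 deg
# → Li Auto / Leiniao Air: 47.9 deg
```

Under this assumption both products occupy a diagonal field of view of roughly 46 to 48 degrees, so the larger quoted screen size mainly reflects the longer nominal viewing distance.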

Audi's VR glasses will first be launched in the USA in 2023. The hardware is provided by HTC and integrated into the Holoride Pioneers' Pack in-vehicle entertainment system for gaming and video scenarios.

3. Chip vendors, algorithm providers and system integrators work together to create active cockpit interaction based on multimodal interaction

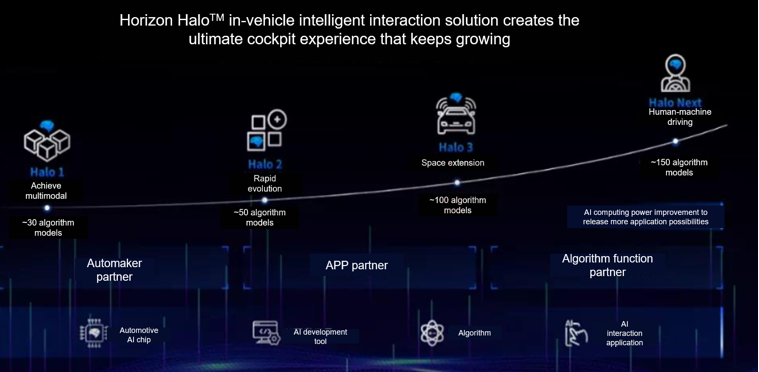

(1) Chip companies, represented by Horizon, integrate multimodal interaction into their intelligent driving solutions

Horizon Halo, a cockpit solution Horizon built on its Journey 2 and Journey 3 chips, can fuse vision, voice and other sensor data to achieve active interaction. Halo 3.0, for instance, provides front- and rear-row users with a complete set of AI functions, including DMS, face detection, behavior detection, gesture recognition, child behavior detection and multimodal voice interaction.

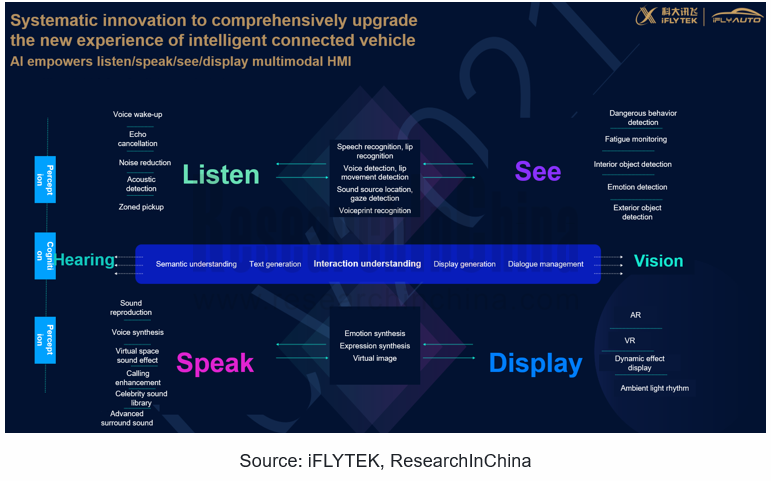

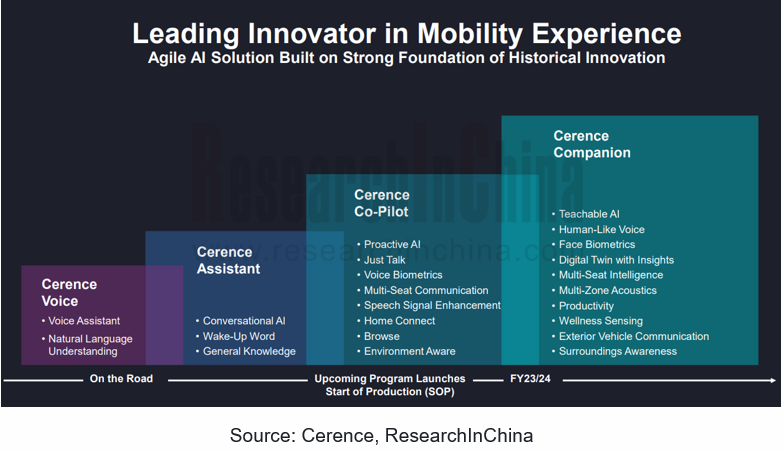

(2) iFLYTEK, Cerence and others entered from voice, while SenseTime, ArcSoft and others entered from vision; each integrates its strongest mode with other modes to create overall cockpit solutions

iFLYTEK builds a multimodal system on its full-link "listening, speaking, seeing and displaying" technology, enabling the vehicle to fuse voice, image, liveness and other information throughout the whole usage cycle of getting in, driving and getting out. The vehicle can thus understand occupants more actively and deeply, proactively care for them, push relevant content and services, and adjust vehicle settings, exploring a disruptive interaction experience.

Based on its strengths in voice, Cerence will integrate vehicle data (fatigue monitoring, mobile phone interconnection, entertainment system, air conditioner, fuel, charging, seat, GPS, air quality) with in-vehicle multimodal interaction (voice, speech synthesis, text input, eye tracking, gesture recognition, emotion recognition, biometrics) to create immersive cockpit interaction in the future.

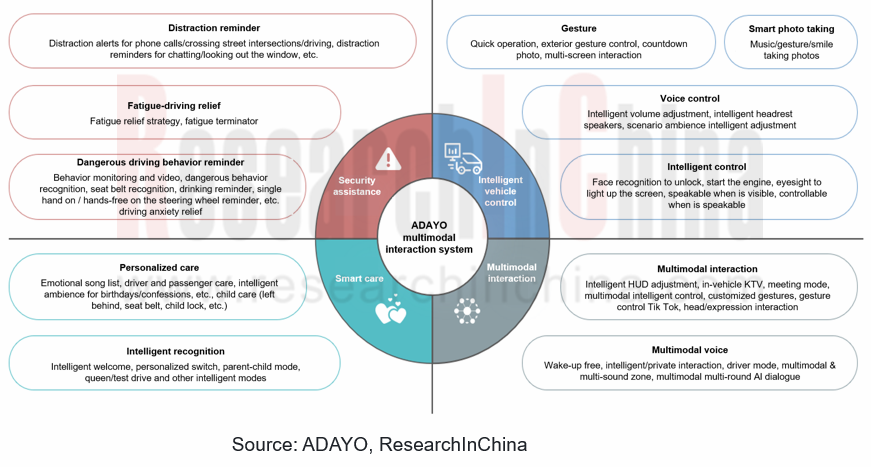

(3) ADAYO, Desay SV and other Tier 1 suppliers combine multimodal interaction with scenarios to create a personalized cockpit experience

Based on a high-compute AI chip and recognition technologies such as vision, hearing, touch, multimodal early fusion (front-fusion) and multimodal late fusion (rear-fusion), ADAYO's multimodal interaction system supports 4 major categories and 70+ human-machine interaction scenarios. These include opening the window or air conditioner by voice + gesture, see-and-speak, greeting occupants as they get in, soothing crying children, voice control, starting the engine by face recognition, lighting up the screen by eye control, and driver behavior monitoring with dangerous behavior alerts. The system gives users active, emotional multimodal interaction experiences and meets the personalized needs of thousands of users.
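ADAYO's description distinguishes multimodal early (front) fusion from late (rear) fusion. As a generic illustration of that distinction, not ADAYO's design: early fusion concatenates per-modality features before a single joint model, while late fusion lets each modality score the candidate intents separately and then blends the scores. The linear scorers, feature values, weights, blending factor `alpha` and intent labels below are all made up for illustration.

```python
from __future__ import annotations

INTENTS = ["open_window", "turn_on_ac"]  # hypothetical intent labels


def linear_scores(features: list[float], weights: dict[str, list[float]]) -> dict[str, float]:
    """Score each intent as the dot product of a feature vector with that intent's weights."""
    return {i: sum(f * w for f, w in zip(features, weights[i])) for i in INTENTS}


def early_fusion(voice_feat: list[float], vision_feat: list[float],
                 joint_weights: dict[str, list[float]]) -> str:
    """Early fusion: concatenate modality features, then run one joint model."""
    scores = linear_scores(voice_feat + vision_feat, joint_weights)
    return max(scores, key=scores.get)


def late_fusion(voice_feat: list[float], vision_feat: list[float],
                voice_weights: dict[str, list[float]],
                vision_weights: dict[str, list[float]], alpha: float = 0.5) -> str:
    """Late fusion: score each modality separately, then blend the per-intent scores."""
    voice_scores = linear_scores(voice_feat, voice_weights)
    vision_scores = linear_scores(vision_feat, vision_weights)
    fused = {i: alpha * voice_scores[i] + (1 - alpha) * vision_scores[i] for i in INTENTS}
    return max(fused, key=fused.get)


# Made-up features: voice = ("open" keyword, "too hot" keyword), vision = (hand toward window, toward vent)
voice_feat, vision_feat = [0.8, 0.3], [0.9, 0.1]
voice_w = {"open_window": [1.0, 0.0], "turn_on_ac": [0.2, 1.0]}
vision_w = {"open_window": [1.0, 0.0], "turn_on_ac": [0.0, 1.0]}
joint_w = {"open_window": [1.0, 0.0, 1.0, 0.0], "turn_on_ac": [0.2, 1.0, 0.0, 1.0]}
print(early_fusion(voice_feat, vision_feat, joint_w))                # → open_window
print(late_fusion(voice_feat, vision_feat, voice_w, vision_w))       # → open_window
```

Early fusion lets a joint model learn cross-modal correlations but needs synchronized multimodal training data; late fusion keeps each modality's model independent, which makes it easier to add or drop a sensor.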
