Research on automotive vision algorithms: focusing on urban scenarios, BEV evolves into three technology routes.

1. What is BEV?

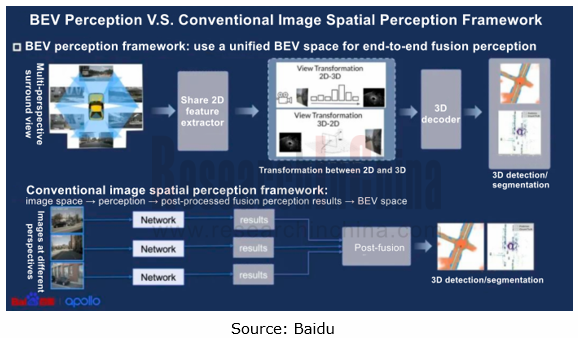

BEV (Bird's Eye View), also known as God's Eye View, is an end-to-end perception technology in which a neural network transforms image information from image space (the cameras' perspective view) into BEV space (a top-down view of the vehicle's surroundings).
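To make "image space to BEV space" concrete, the sketch below uses classic inverse perspective mapping (IPM), a purely geometric transform rather than the learned, neural transformation that BEV networks use. It assumes a flat road, a pinhole camera with no roll or yaw, and a vehicle frame with x forward, y left and z up; the function and parameter names are hypothetical.

```python
import math

def pixel_to_ground(u, v, fx, fy, cx, cy, cam_height, pitch=0.0):
    """Project pixel (u, v) onto a flat ground plane (z = 0), in metres.

    Assumptions (illustrative only): pinhole intrinsics fx, fy, cx, cy;
    camera at height cam_height, tilted down by `pitch` radians, no roll/yaw.
    Returns (x, y) in the vehicle frame, or None if the ray misses the ground.
    """
    # Back-project the pixel to a viewing ray in the camera frame
    # (x right, y down, z forward).
    xc = (u - cx) / fx
    yc = (v - cy) / fy
    # Express the ray in the vehicle frame for a level camera:
    # camera forward -> +x, camera right -> -y, camera down -> -z.
    dx, dy, dz = 1.0, -xc, -yc
    # Tilt the ray down by `pitch` (rotation about the lateral axis).
    c, s = math.cos(pitch), math.sin(pitch)
    dx, dz = c * dx + s * dz, -s * dx + c * dz
    # Intersect with z = 0; the camera sits at z = cam_height.
    if dz >= 0:
        return None  # ray points at or above the horizon
    t = cam_height / -dz
    return (t * dx, t * dy)

# A pixel 150 px below the principal point, level camera 1.5 m high
print(pixel_to_ground(960.0, 690.0, 1000.0, 1000.0, 960.0, 540.0, 1.5))
```

IPM breaks down on slopes and for anything above the road surface (vehicles, pedestrians), which is one motivation for letting a network learn the view transformation instead.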

Compared with conventional perception in image space, BEV perception feeds data collected by multiple sensors into one unified space for processing. This effectively avoids the error accumulation (error superposition) that arises when per-sensor results are combined afterwards, and it also makes temporal fusion easier, forming a 4D space (3D space plus time).

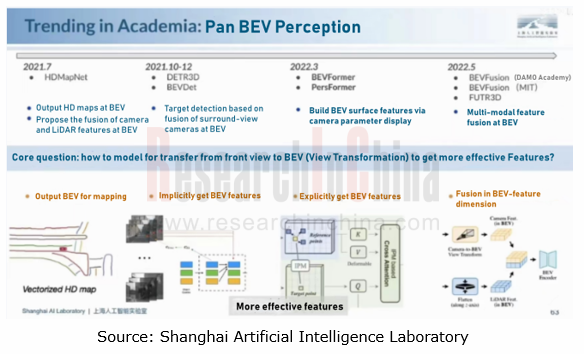

BEV is not a new technology. In 2016, Baidu began implementing point cloud perception in BEV; in 2021, Tesla's introduction of BEV drew widespread attention in the industry. Different BEV perception algorithms target different sensor inputs, basic tasks, and scenarios. Examples include the vision-only BEVFormer algorithm and the BEVFusion algorithm based on a multi-modal fusion strategy.
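Point cloud perception in BEV typically starts by binning LiDAR points into a top-down grid. The sketch below shows that generic step only; it is not Baidu's pipeline, and the grid range, the 0.5 m cell size and the "maximum height per cell" feature are illustrative assumptions.

```python
def rasterize_bev(points, x_range=(0.0, 50.0), y_range=(-25.0, 25.0), cell=0.5):
    """Bin LiDAR points (x, y, z) into a BEV grid that stores max height per cell.

    Assumed conventions: x forward, y left, metres; ranges and cell size are
    illustrative defaults, not values from any production system.
    """
    nx = int(round((x_range[1] - x_range[0]) / cell))
    ny = int(round((y_range[1] - y_range[0]) / cell))
    # None marks an empty cell; otherwise the highest z observed in that cell.
    grid = [[None] * ny for _ in range(nx)]
    for x, y, z in points:
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue  # outside the BEV region of interest
        # Clamp indices to guard against floating-point edge cases.
        i = min(int((x - x_range[0]) / cell), nx - 1)
        j = min(int((y - y_range[0]) / cell), ny - 1)
        if grid[i][j] is None or z > grid[i][j]:
            grid[i][j] = z
    return grid

pts = [(10.2, 0.3, 1.2), (10.4, 0.1, 0.5), (60.0, 0.0, 2.0)]
grid = rasterize_bev(pts)
print(grid[20][50])  # both nearby points share this cell; the higher z is kept
```

Real detectors usually learn richer per-cell features (point counts, intensity, learned pillar encodings) instead of a single height value.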

2. Three technology routes of BEV perception algorithm

In terms of implementation, the BEV technology architectures of the various players are roughly the same, but the technical solutions they adopt differ. So far, three major technology routes have emerged:

Vision-only BEV perception route, typified by Tesla;

BEV fused perception route, typified by Haomo.ai;

Vehicle-road integrated BEV perception route, typified by Baidu.

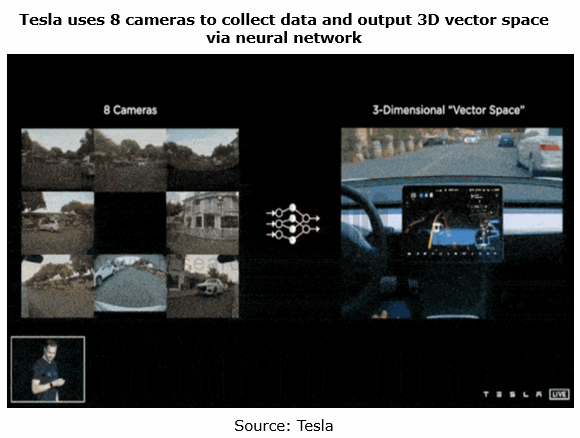

Vision-only BEV perception technology route: Tesla is the representative company of this route. In 2021, it was the first to use a pre-fusion BEV algorithm that feeds camera images directly into the AI algorithm, generates a 3D space from a bird's-eye view, and outputs perception results in that space. This space contains dynamic information such as vehicles and pedestrians, static information such as lane lines, traffic signs, traffic lights and buildings, and the coordinate position, direction angle, distance, speed, and acceleration of each element.
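One way to picture the per-element output described above is as a record in an ego-centred BEV frame. The record type below is hypothetical (Tesla's actual output format is not public); it assumes x forward and y left in metres, and shows how distance, speed and bearing follow from the stored coordinates and velocity.

```python
import math
from dataclasses import dataclass

@dataclass
class BEVObject:
    """Hypothetical record for one perceived element in the ego BEV frame.

    Assumed frame: x forward, y left, metres; angles in radians.
    """
    category: str        # e.g. "vehicle", "pedestrian", "traffic_light"
    x: float
    y: float
    heading: float       # direction angle, 0 = same direction as ego
    vx: float = 0.0      # velocity components in m/s (0 for static elements)
    vy: float = 0.0
    ax: float = 0.0      # acceleration components in m/s^2
    ay: float = 0.0

    @property
    def distance(self) -> float:
        return math.hypot(self.x, self.y)

    @property
    def speed(self) -> float:
        return math.hypot(self.vx, self.vy)

    @property
    def bearing_deg(self) -> float:
        # Angle of the element relative to ego forward; positive = to the left.
        return math.degrees(math.atan2(self.y, self.x))

car = BEVObject("vehicle", x=30.0, y=40.0, heading=0.0, vx=3.0, vy=4.0)
print(car.distance, car.speed)  # 50.0 5.0
```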

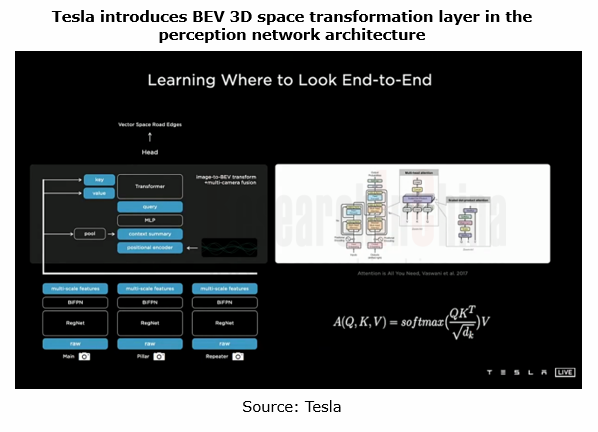

Tesla uses a backbone network to extract features from each camera's images, then uses a Transformer to convert the multi-camera features from image space into BEV space. The Transformer, a deep learning model based on the attention mechanism, scales well to learning tasks on massive data, and in this setting it helps the network perceive objects and predict their depth accurately.
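The core idea of an attention-based view transform can be sketched as cross-attention: each BEV grid cell holds a query vector that attends over feature tokens from all cameras at once. This is a minimal illustration, not Tesla's network; it assumes features double as keys and values and omits the learned projections, positional encodings, multiple heads and (in BEVFormer) deformable sampling that real models use.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def bev_cross_attention(bev_queries, camera_features):
    """Scaled dot-product cross-attention from BEV queries to camera tokens.

    bev_queries: list of query vectors, one per BEV cell (dimension d).
    camera_features: dict camera_name -> list of feature vectors (dimension d),
        used as both keys and values in this simplified sketch.
    """
    # Pool tokens from every camera so a BEV cell can draw evidence from
    # whichever camera(s) actually observe it.
    tokens = [f for feats in camera_features.values() for f in feats]
    d = len(bev_queries[0])
    out = []
    for q in bev_queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        w = softmax(scores)
        # Weighted sum of value vectors, one output channel at a time.
        out.append([sum(wj * t[i] for wj, t in zip(w, tokens)) for i in range(d)])
    return out

feats = {"front": [[10.0, 0.0]], "left": [[0.0, 10.0]]}
print(bev_cross_attention([[0.0, 0.0]], feats))  # [[5.0, 5.0]]: equal weights
```

A query that matches the front-camera token strongly would instead return a vector close to that token, which is how a BEV cell ends up "looking at" the right camera.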

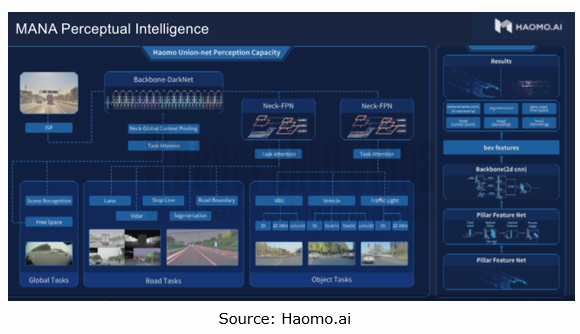

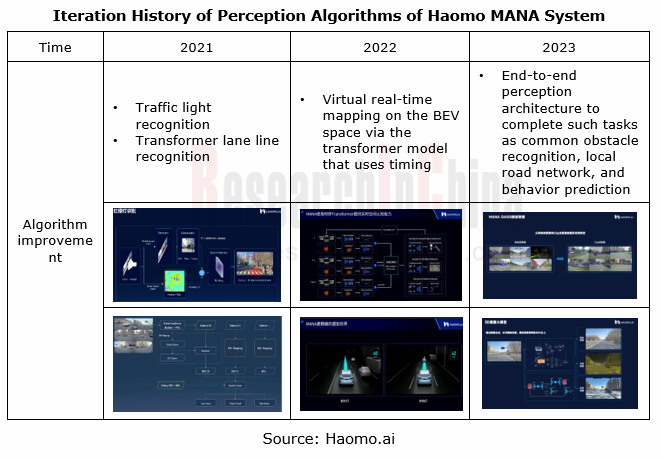

BEV fused perception technology route: Haomo.ai is an autonomous driving company under Great Wall Motor. In 2022, it announced an urban NOH solution that relies heavily on perception and only lightly on maps. The core technology comes from MANA (Snow Lake).

In the MANA perception architecture, Haomo.ai adopts BEV fused perception (camera + LiDAR). Using its self-developed Transformer algorithm, MANA not only transforms vision-only information into BEV, but also fuses camera and LiDAR feature data, which the company describes as cross-modal raw data fusion.
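Haomo.ai has not published MANA's fusion network, so the sketch below shows only a generic feature-level BEV fusion pattern, similar in spirit to BEVFusion: once camera and LiDAR features live on the same BEV grid, they can be concatenated per cell and mixed by a learned linear map (equivalent to a 1x1 convolution). The weights here are hypothetical placeholders, not trained values.

```python
def fuse_bev(camera_bev, lidar_bev, weights, bias):
    """Fuse two spatially aligned BEV feature maps cell by cell.

    camera_bev, lidar_bev: H x W grids of feature vectors on the same BEV grid.
    weights: C_out x (C_cam + C_lidar) matrix; bias: length C_out.
    (Hypothetical parameters; in practice they are learned.)
    """
    H, W = len(camera_bev), len(camera_bev[0])
    if (len(lidar_bev), len(lidar_bev[0])) != (H, W):
        raise ValueError("BEV grids must be spatially aligned")
    fused = []
    for i in range(H):
        row = []
        for j in range(W):
            # Channel-wise concatenation of the two modalities for this cell.
            x = list(camera_bev[i][j]) + list(lidar_bev[i][j])
            # Per-cell linear mix (a 1x1 convolution) followed by ReLU.
            row.append([max(0.0, sum(w * xi for w, xi in zip(wr, x)) + b)
                        for wr, b in zip(weights, bias)])
        fused.append(row)
    return fused

cam = [[[1.0, 2.0]]]  # 1 x 1 grid, 2 camera channels
lidar = [[[3.0]]]     # 1 x 1 grid, 1 LiDAR channel
print(fuse_bev(cam, lidar, [[1, 0, 0], [0, 1, 1], [-1, 0, 0]], [0, 0, 0]))
```

The key precondition is spatial alignment: fusion happens per BEV cell, so both modalities must first be projected onto the same grid.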

Since its launch in late 2021, MANA has kept evolving. With Transformer-based perception algorithms, it has solved multiple road perception problems, such as lane line detection, obstacle detection, drivable area segmentation, traffic light detection & recognition, and traffic sign recognition.

In January 2023, MANA was upgraded again with five major models, which bring a generational upgrade to the vehicle perception architecture and handle tasks such as general obstacle recognition, local road network construction, and behavior prediction. The five models are: a visual self-supervised model (automatic annotation of 4D Clips), a 3D reconstruction model (a low-cost solution to data distribution problems), a multi-modal mutual supervision model (general obstacle recognition), a dynamic environment model (perception-heavy technology that reduces dependence on HD maps), and a human-driving self-supervised cognition model (making the driving policy more human-like, safe and smooth).

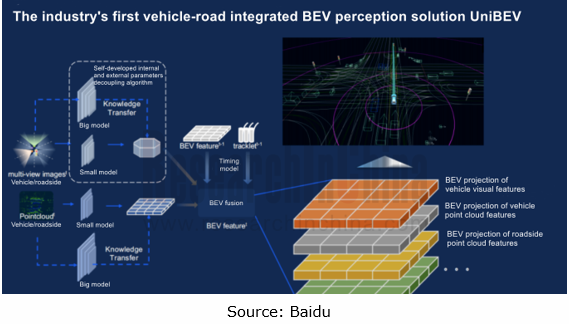

Vehicle-road integrated BEV perception technology route: in January 2023, Baidu introduced UniBEV, which it describes as the industry's first end-to-end vehicle-road integrated perception solution.

Features:

Fusion of all vehicle and roadside data, covering online mapping with multiple vehicle cameras and sensors, dynamic obstacle perception, and multi-intersection multi-sensor fusion from the roadside perspective;

Self-developed intrinsic and extrinsic parameter decoupling algorithm, which lets UniBEV project sensors into a unified BEV space regardless of where they are mounted on the vehicle or at the roadside;

In the unified BEV space, UniBEV can more easily achieve multi-modal, multi-view, and multi-temporal fusion of spatial-temporal features;

The closed loop of big data + big model + model miniaturization keeps UniBEV ahead in dynamic and static perception tasks on both the vehicle side and the roadside.
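Baidu's decoupling algorithm is proprietary, but its goal can be illustrated with the extrinsic half of the problem: once every sensor's pose relative to a shared frame is known, observations from vehicle-mounted and roadside sensors land at the same BEV coordinates. The sketch below is a hypothetical illustration that models only position and yaw (a full extrinsic also includes roll and pitch); the poses used are made-up values.

```python
import math

def make_pose(x, y, z, yaw):
    """4x4 homogeneous transform from a sensor frame to a shared world/BEV frame.

    Simplification (assumption): only yaw is modelled; roll and pitch are zero.
    """
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0, x],
            [s, c, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]

def to_shared_frame(pose, point):
    """Apply the sensor-to-shared-frame transform to a 3D point."""
    v = (point[0], point[1], point[2], 1.0)  # homogeneous coordinates
    return tuple(sum(pose[r][k] * v[k] for k in range(4)) for r in range(3))

# Hypothetical setup: a vehicle sensor at the origin facing +x, and a roadside
# camera 20 m ahead, 5 m to the left, 6 m up, facing back toward the vehicle.
vehicle_pose = make_pose(0.0, 0.0, 0.0, 0.0)
roadside_pose = make_pose(20.0, 5.0, 6.0, math.pi)

# The same object as seen in each sensor's own frame...
p_vehicle = to_shared_frame(vehicle_pose, (10.0, 0.0, 0.0))
p_roadside = to_shared_frame(roadside_pose, (10.0, 5.0, -6.0))
print(p_vehicle, p_roadside)  # ...maps to (approximately) the same point
```

Keeping intrinsics (how each camera forms an image) separate from extrinsics (where it sits) means a new mounting position only changes the pose, not the rest of the pipeline.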

Baidu’s UniBEV solution will be applied to ANP3.0, its advanced intelligent driving product planned to be mass-produced and delivered in 2023. Currently, Baidu has started ANP3.0 generalization tests in Beijing, Shanghai, Guangzhou and Shenzhen.

Baidu ANP3.0 adopts a "vision-only + LiDAR" dual redundancy solution. In the R&D and testing phase, using "BEV Surround View 3D Perception" technology, ANP3.0 can handle multiple urban scenarios relying on vision alone. In the mass production stage, ANP3.0 will add LiDAR to achieve multi-sensor fused perception for more complex urban scenarios.

3. BEV perception algorithms facilitate urban NOA

As vision algorithms evolve, BEV perception algorithms have become the core technology that OEMs and autonomous driving companies such as Tesla, Xpeng, Great Wall Motor, ARCFOX, QCraft and Pony.ai use to tackle urban scenarios.

Xpeng Motors: its new-generation perception architecture XNet performs multi-frame temporal pre-fusion of camera data and outputs 4D dynamic information (e.g., vehicle speed and motion prediction) and 3D static information (e.g., lane line positions) in BEV space.
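XNet's internals are not disclosed, but any multi-frame BEV fusion has to align the previous frame with the current one before combining them; otherwise the ego vehicle's own motion looks like object motion. The sketch below shows that alignment on object positions and how speed then follows from two frames. It assumes a 2D rigid ego motion (translation in the previous frame plus a counter-clockwise yaw change); the function names are hypothetical, and real networks typically apply the same warp to whole BEV feature maps rather than to individual points.

```python
import math

def to_current_frame(point, ego_dx, ego_dy, ego_dyaw):
    """Re-express a 2D BEV point from the previous ego frame in the current one.

    Assumed inputs: ego_dx, ego_dy = ego translation between frames, measured
    in the previous frame (m); ego_dyaw = ego yaw change (rad, counter-clockwise).
    """
    x, y = point[0] - ego_dx, point[1] - ego_dy
    c, s = math.cos(-ego_dyaw), math.sin(-ego_dyaw)
    return (c * x - s * y, s * x + c * y)

def estimate_velocity(prev_pos, curr_pos, ego_dx, ego_dy, ego_dyaw, dt):
    """Ground velocity of a tracked object, expressed in the current ego frame."""
    # Align the previous observation first, so ego motion is cancelled out.
    px, py = to_current_frame(prev_pos, ego_dx, ego_dy, ego_dyaw)
    return ((curr_pos[0] - px) / dt, (curr_pos[1] - py) / dt)

# Ego drives 1 m forward in 0.1 s; a parked car goes from 10 m to 9 m ahead.
print(estimate_velocity((10.0, 0.0), (9.0, 0.0), 1.0, 0.0, 0.0, 0.1))  # (0.0, 0.0)
```

Without the alignment step, the parked car would appear to approach at 10 m/s.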

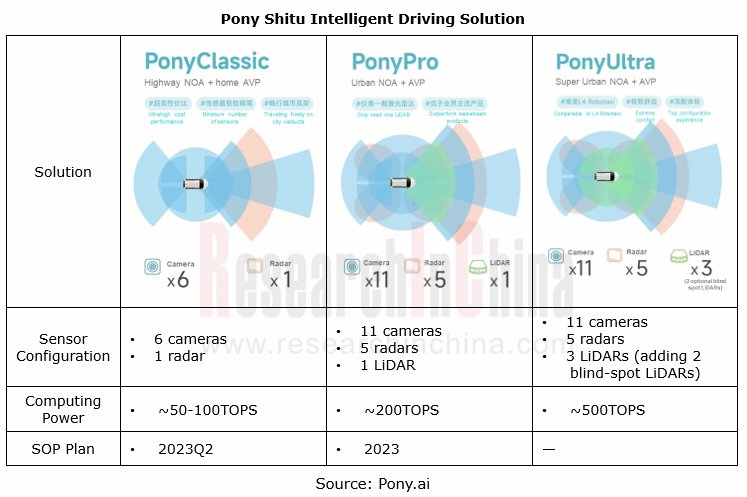

Pony.ai: In January 2023, it announced the intelligent driving solution Pony Shitu. Its key feature, a self-developed BEV perception algorithm, recognizes various types of obstacles, lane lines and passable areas while minimizing computing power requirements, enabling highway and urban NOA with navigation maps only.